- Preface

- 1 Introduction

- 2 The pillars of the Semantic Web

- 3 Web Ontology Language

- 3.1 Historical Notes

- 3.2 Ontology

- 3.3 Primitives of the OWL

- 3.3.1 An Ontology with OWL

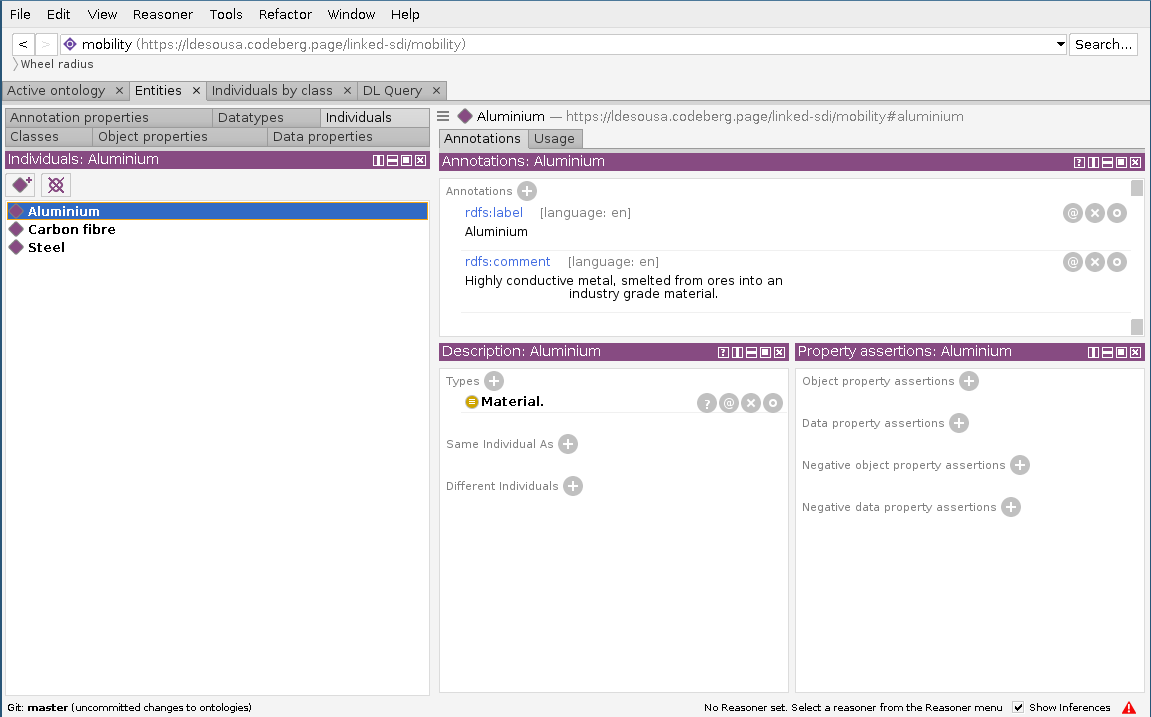

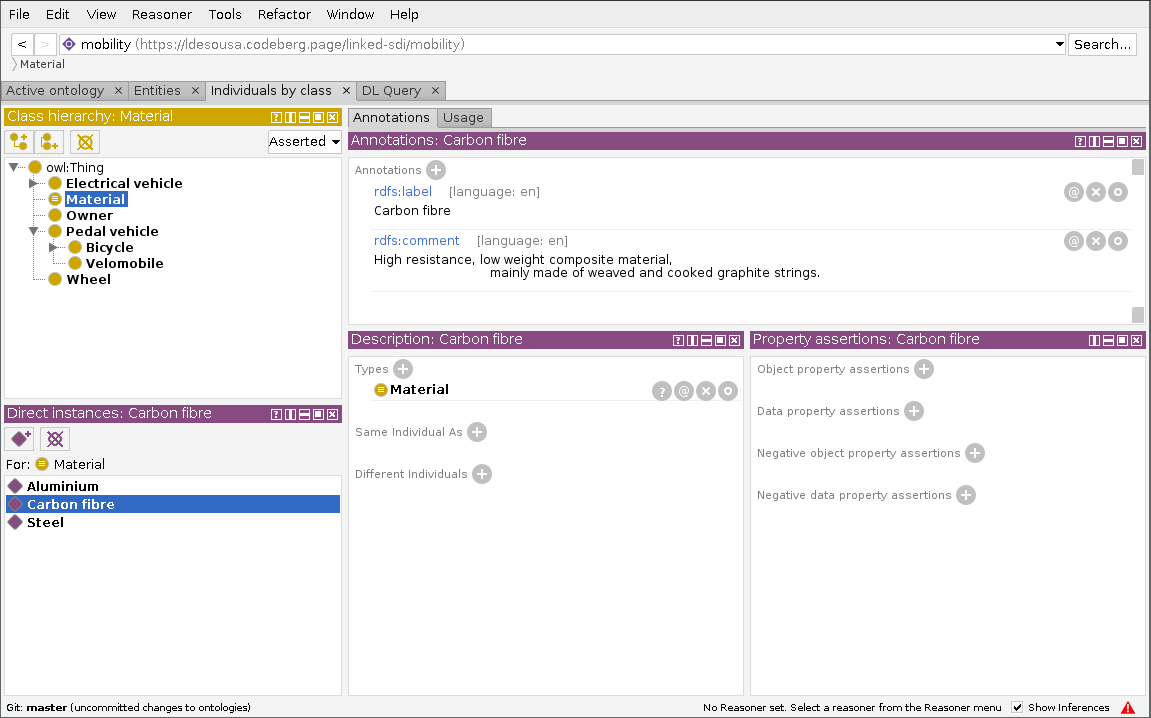

- 3.3.2 Classes and instances

- 3.3.3 Class instances and identifiers

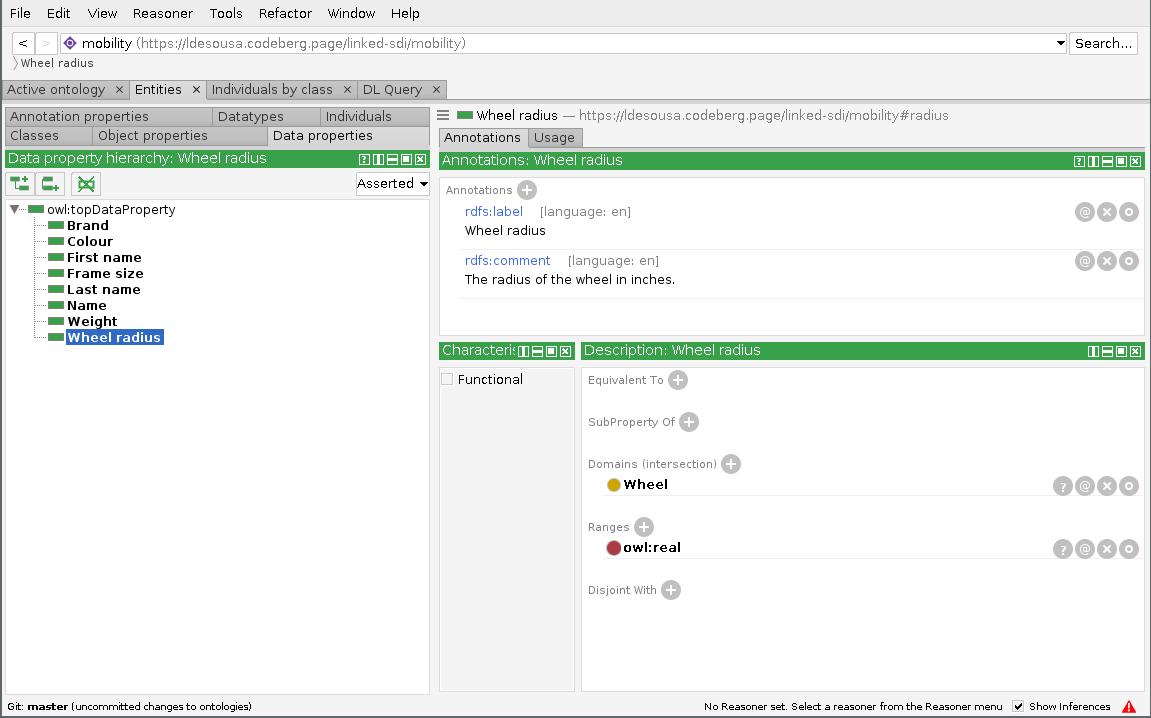

- 3.3.4 Class properties and literal types

- 3.3.5 Literal types

- 3.3.6 Literals facets

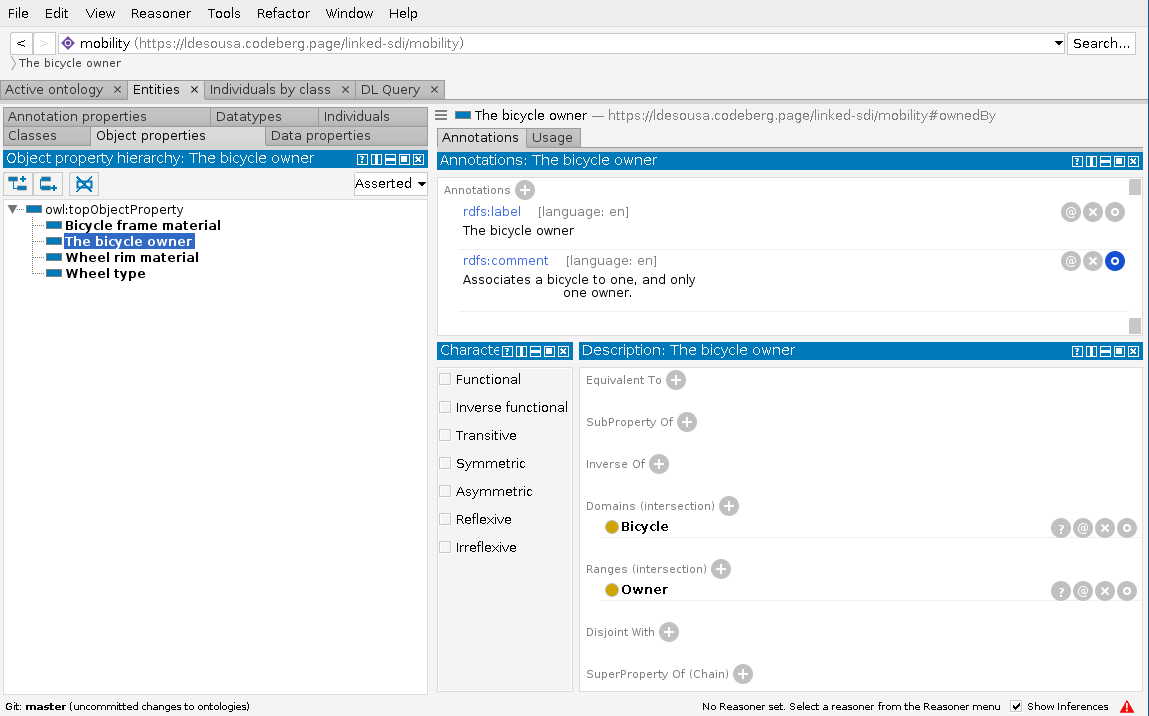

- 3.3.7 Relations and cardinalities

- 3.3.8 Special property restrictions

- 3.3.9 Enumerations

- 3.3.10 Generalisation

- 3.3.11 The Open World Assumption

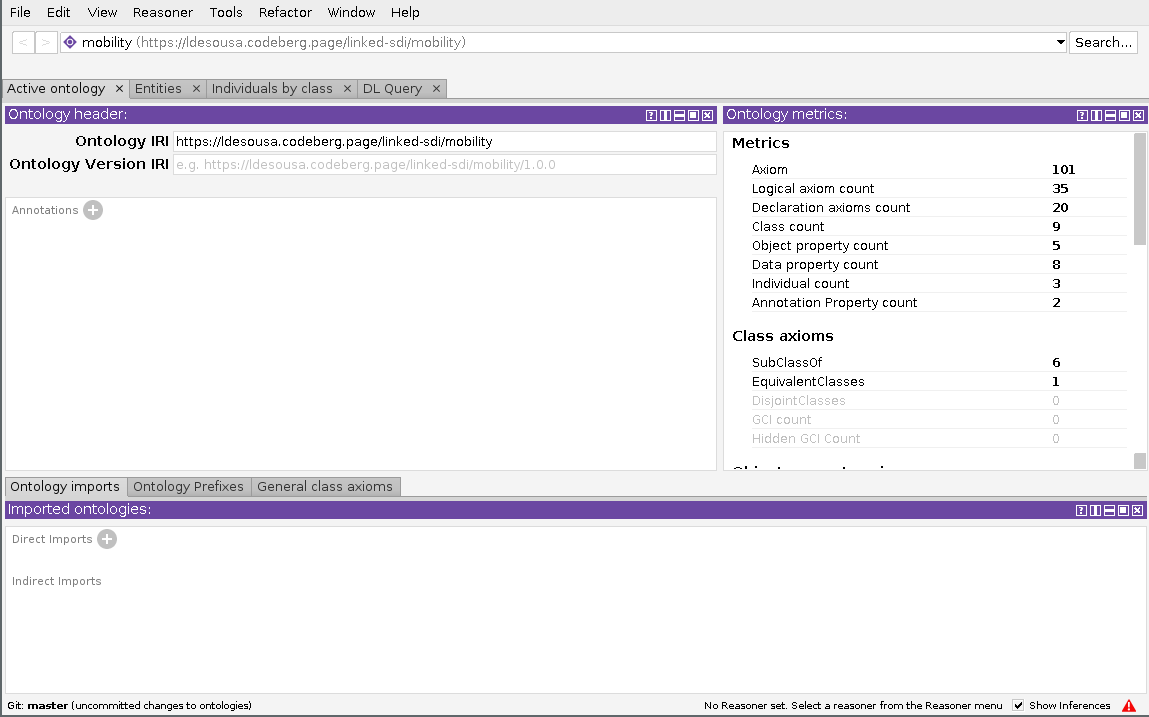

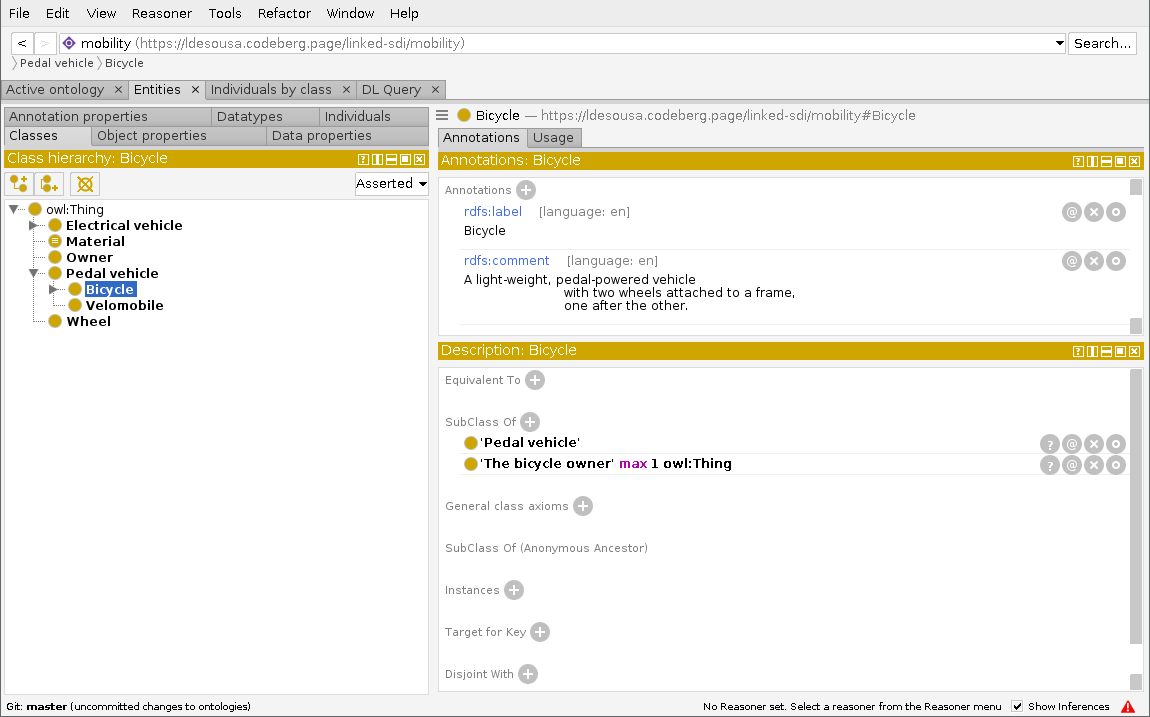

- 3.4 Working with Protégé

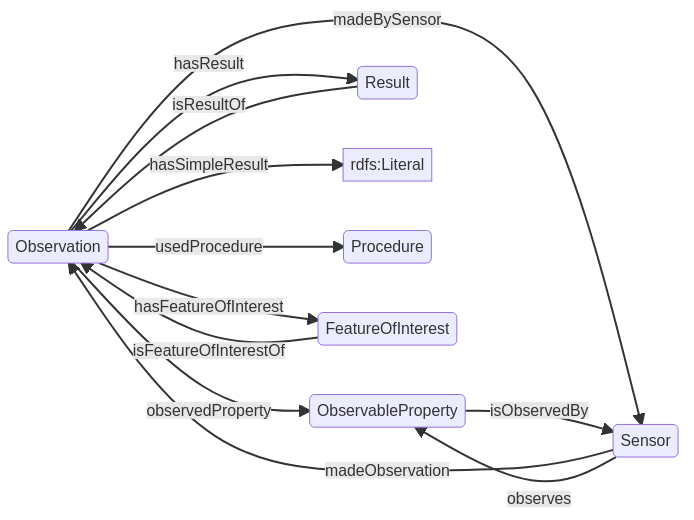

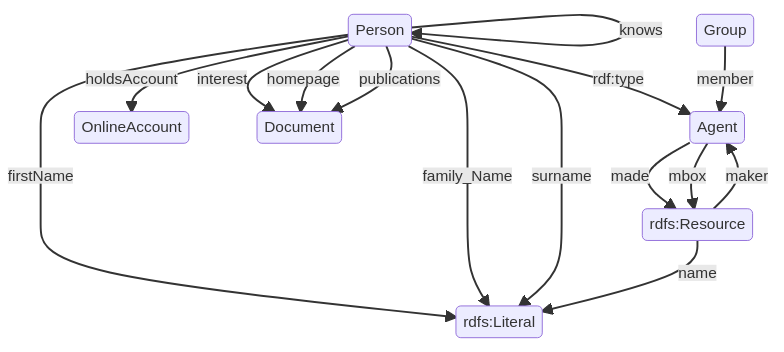

- 3.5 Notable Web Ontologies

- 3.6 The Cyclists knowledge graph

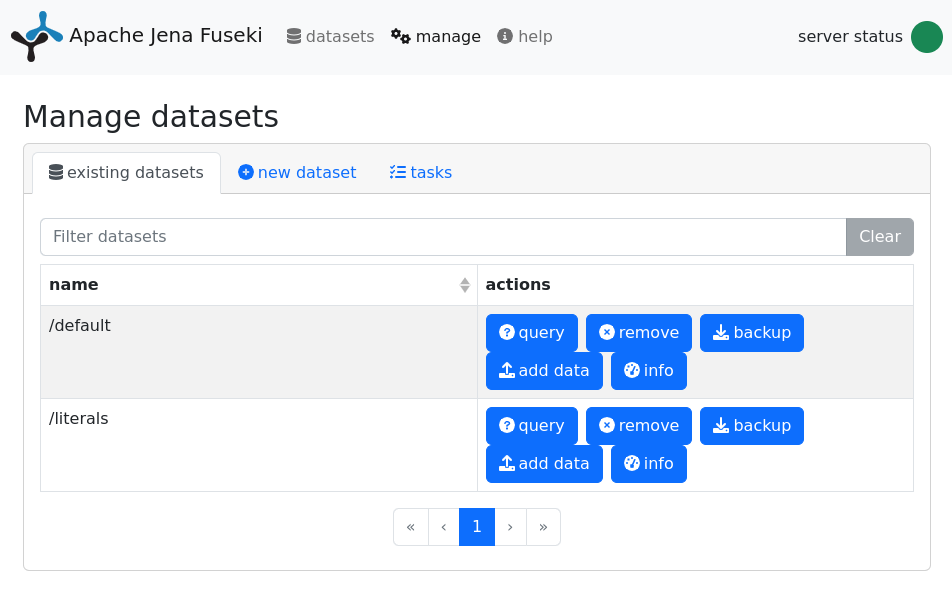

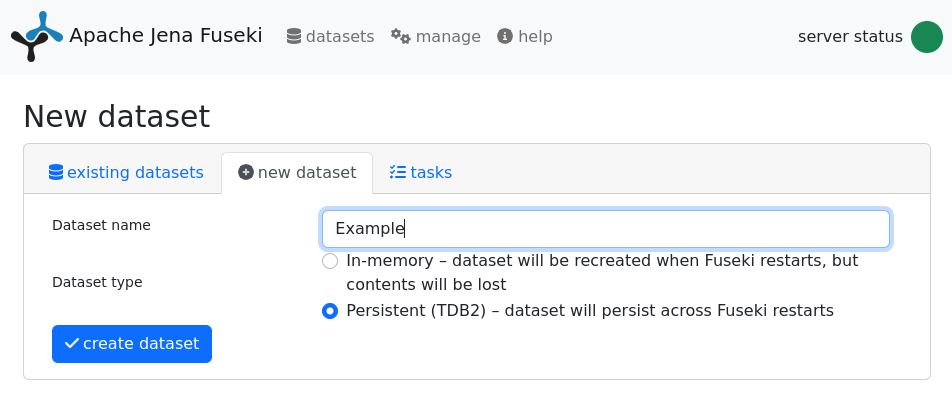

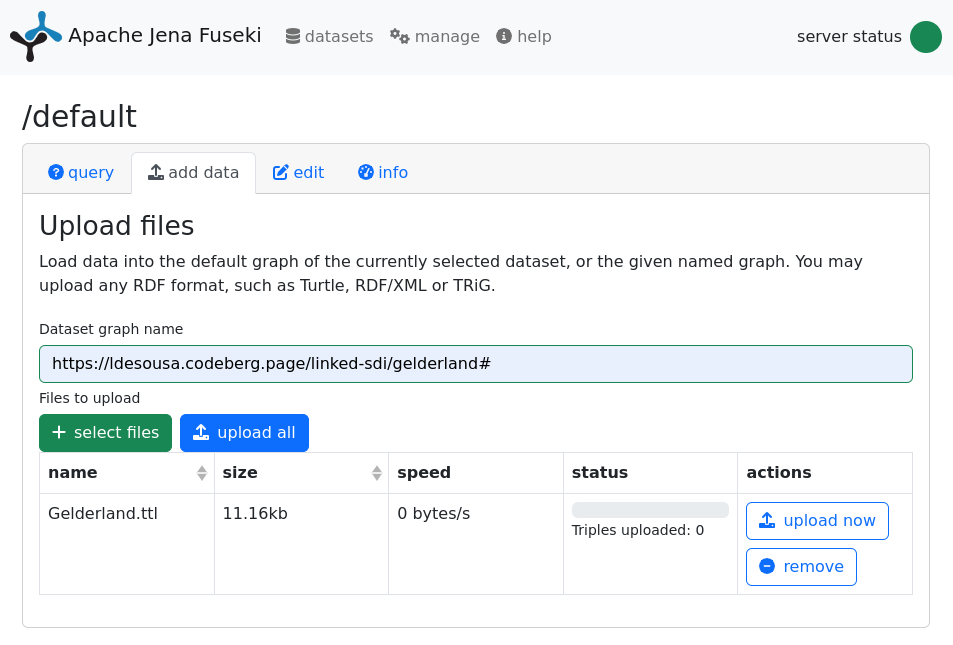

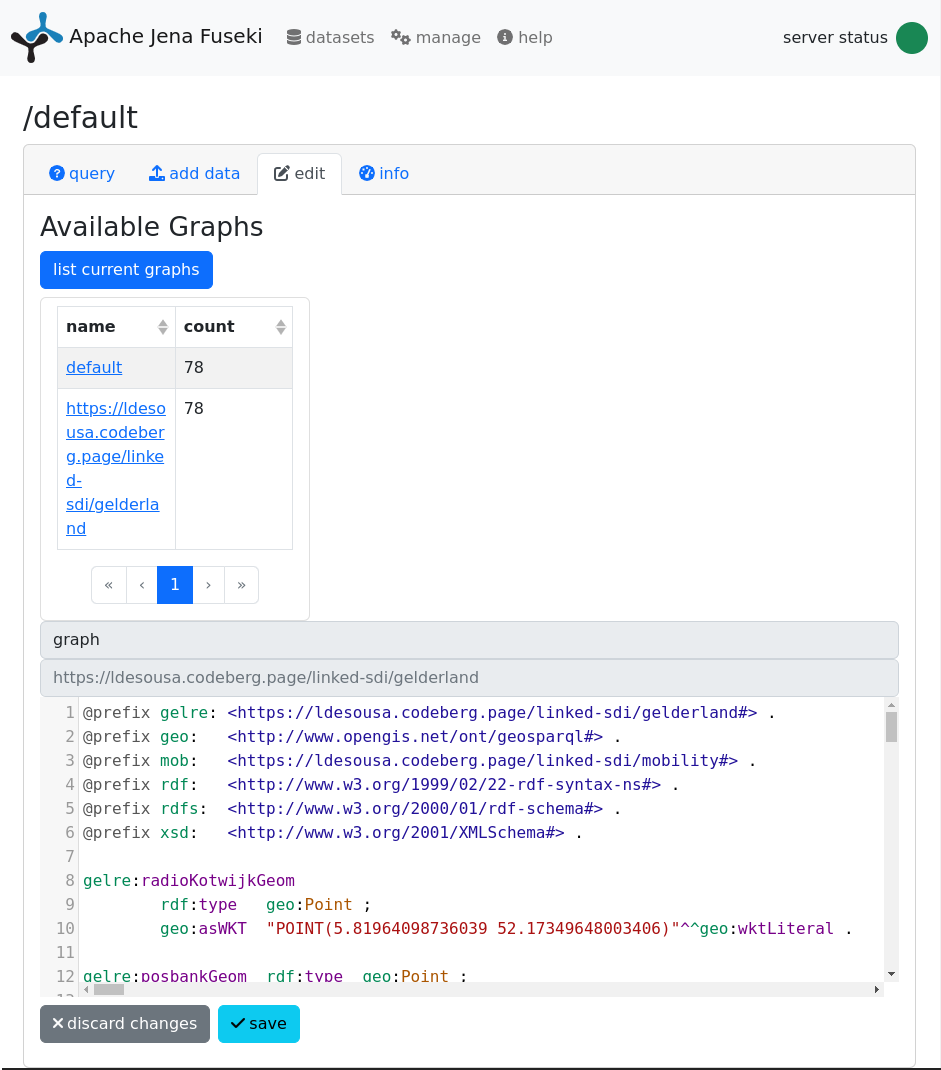

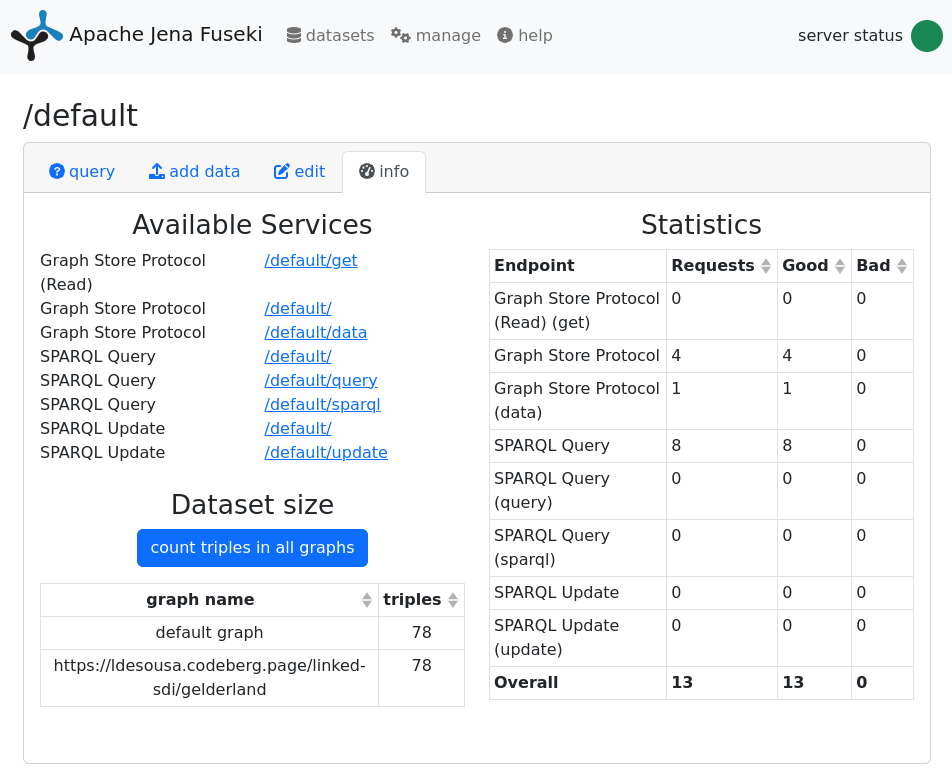

- 4 Triple Stores

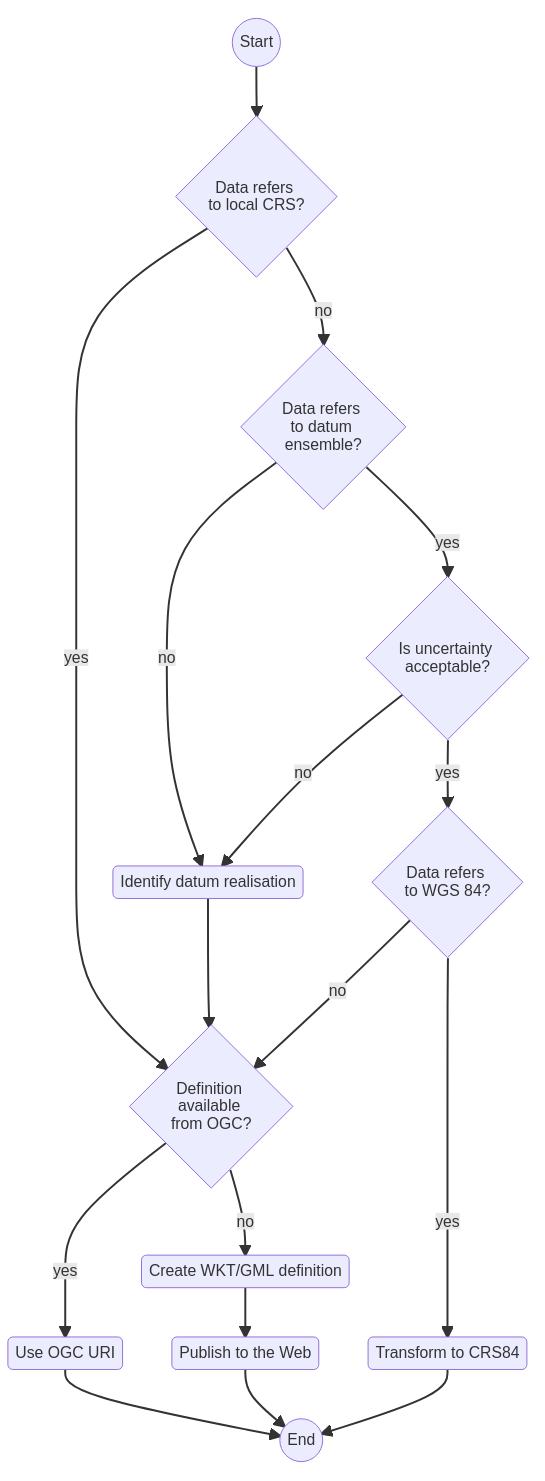

- 5 The SPARQL query language

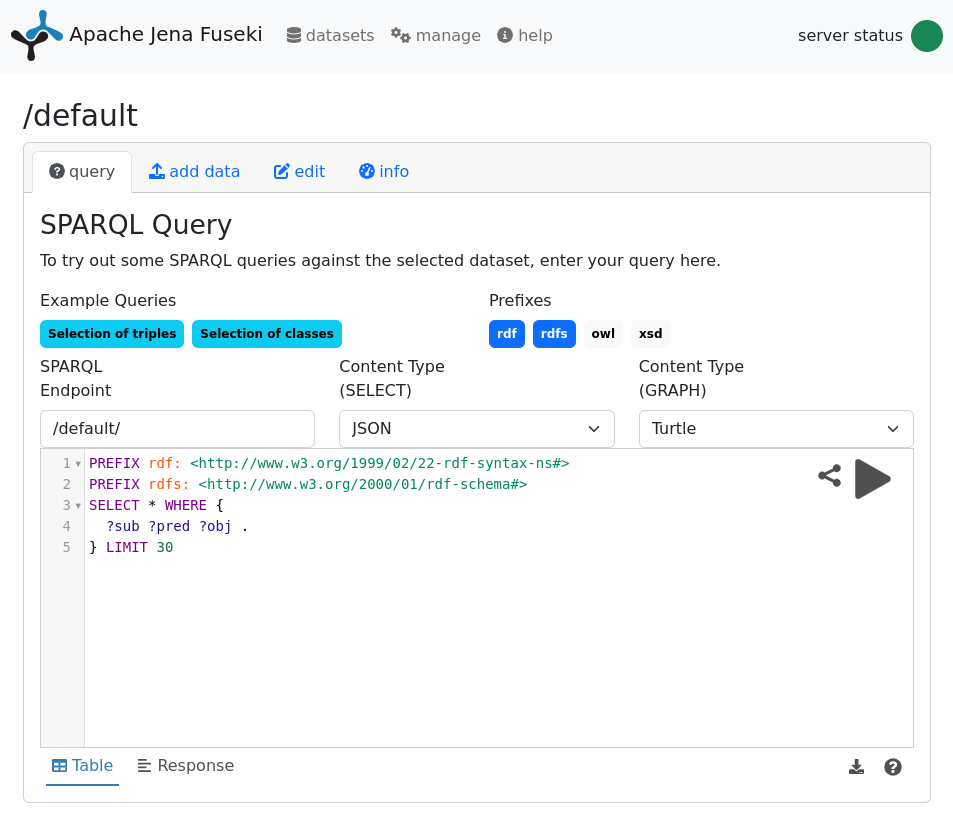

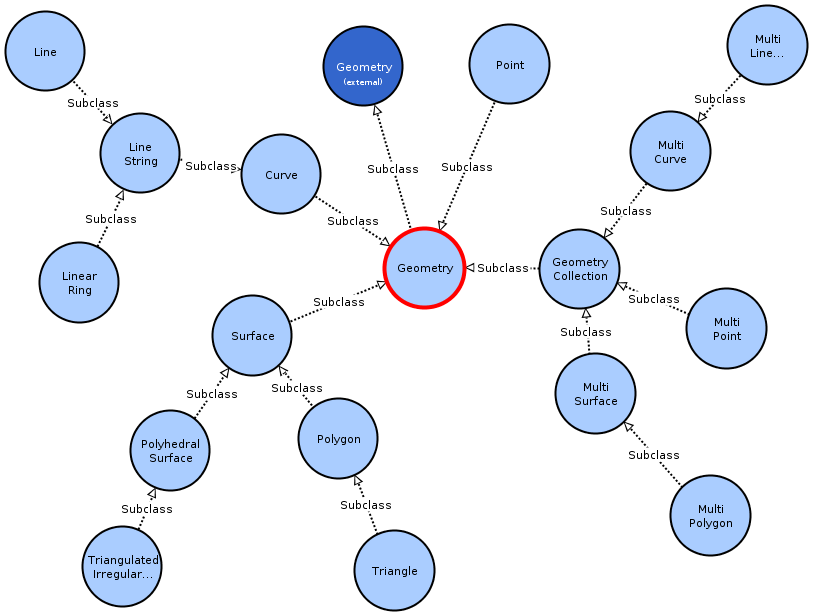

- 6 GeoSPARQL

- 7 Data Transformation

- 8 Data Provision

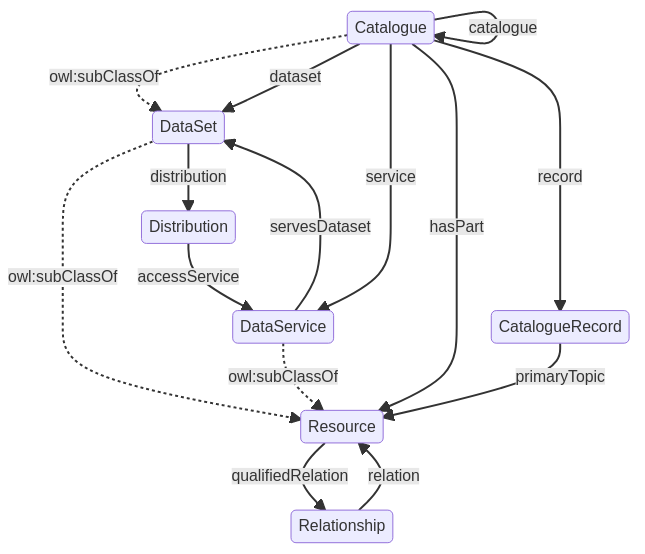

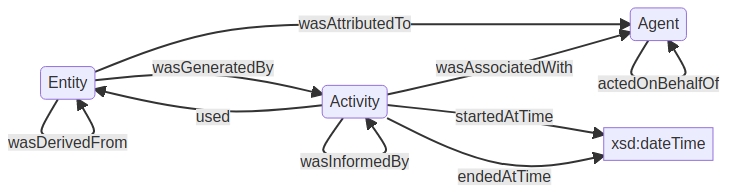

- 9 Meta-data

- 10 Rising trends

- Annexes

- Bibliography

Copyright © 2021-2024 Luís Moreira de Sousa

This work is made available under the licence:

CC BY-NC-ND – Attribution Non-Commercial No-Derivatives

Please consult the licence document for details:

https://creativecommons.org/licenses/by-nc-nd/4.0/

DOI: 10.5281/zenodo.13892963

Version 0.3

5th of October 2024

This book is available online at:

An electronic document can be obtained from:

https://zenodo.org/record/13892963

Preface

Objectives of this book

Welcome! If you picked up this book you are possibly interested in Linked Data, on Spatial Data Infrastructures (SDI), or both. As it happens, this book encompasses the two themes, or better worded, the merger of both.

This book aims to acquaint you with state-of-the-art standards, specifications and technologies that today provide a clear path to the provision and consumption of geo-spatial data on the web. But in a semantically expressive and unequivocal way, and tapping on all referencing infrastructure offered by the World Wide Web (WWW). The path meeting the FAIR (Findable, Accessible, Interoperable and Reusable) goals to what geo-spatial data is concerned.

If you are new to the Semantic Web and Linked Data in general, this manuscript might make for a good sequential reading, especially the first introductory chapters. However, it also intends to function as a handbook, to clarify a particular doubt, to look-up on a particular method or to find a recipe setting up a necessary technology.

After reading this book you should become comfortable using ontologies, transforming data into a linked paradigm with sound semantics, querying linked data services and setting up data storage and provision infrastructures. All making use of best practices and standards issued by authoritative institutions such as the W3C and the OGC. All relying exclusively on open source software and tools.

Recent developments in data infrastructures, with novel data access paradigms based on the Open API and OData specifications, coupled with developments in the Semantic Web towards geo-spatial data are opening a new era in this domain. This book aims to be your gateway to that exciting new SDI world that now unfolds.

Intended readers

The primary target of this book are geo-spatial practitioners and scientists. The users of data services and APIs and those that set up such data access mechanisms. Data providers, be it in science, industry or public administration that wish their work to reach users according to the highest standards of quality and accessibility (e.g. FAIR).

But since this book starts by providing a general introduction to the Semantic Web and Ontology, its readership is effectively broader. The first half of this manuscript provides sufficient content for a general course on these topics, and their positioning within the wider domains of computer science and data science.

Structure

The book starts with a general overview of the motivations to adopt a Linked Data approach to geo-spatial in Chapter 1. It reviews current trends triggered by the W3C, the work of the OGC towards data access APIs and the FAIR data initiative. It goes on to pitch the Semantic Web as the vehicle fulfilling this approach.

The pillars of the Semantic Web are laid out in Chapter 2. This chapter makes you familiar with specifications such as the Unified Resource Identifier (URI), the Resource Description Framework (RDF) and the Web Ontology Language (OWL). In this chapter you can learn what are triples and the different ways of encoding them, particularly with the Turtle syntax.

Chapter 3 dives into the realm of Ontology and its application to information science. It provides the building blocks of the OWL and with a simple example guides you through the development of a web ontology from scratch. This chapter also includes instructions on different tools, both to develop and systematically document a web ontology. Finally it introduces good practices on ontology reuse, a key aspect of the Semantic Web.

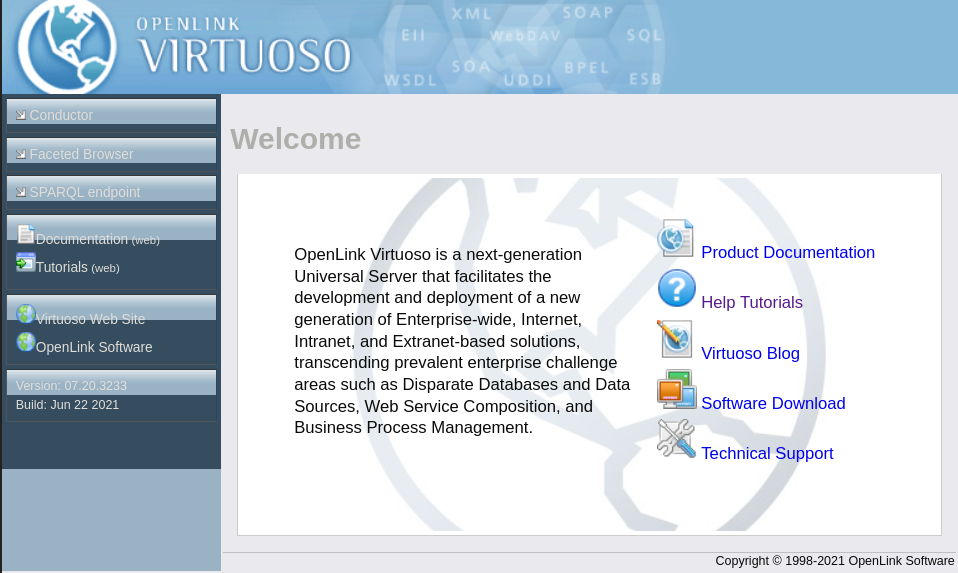

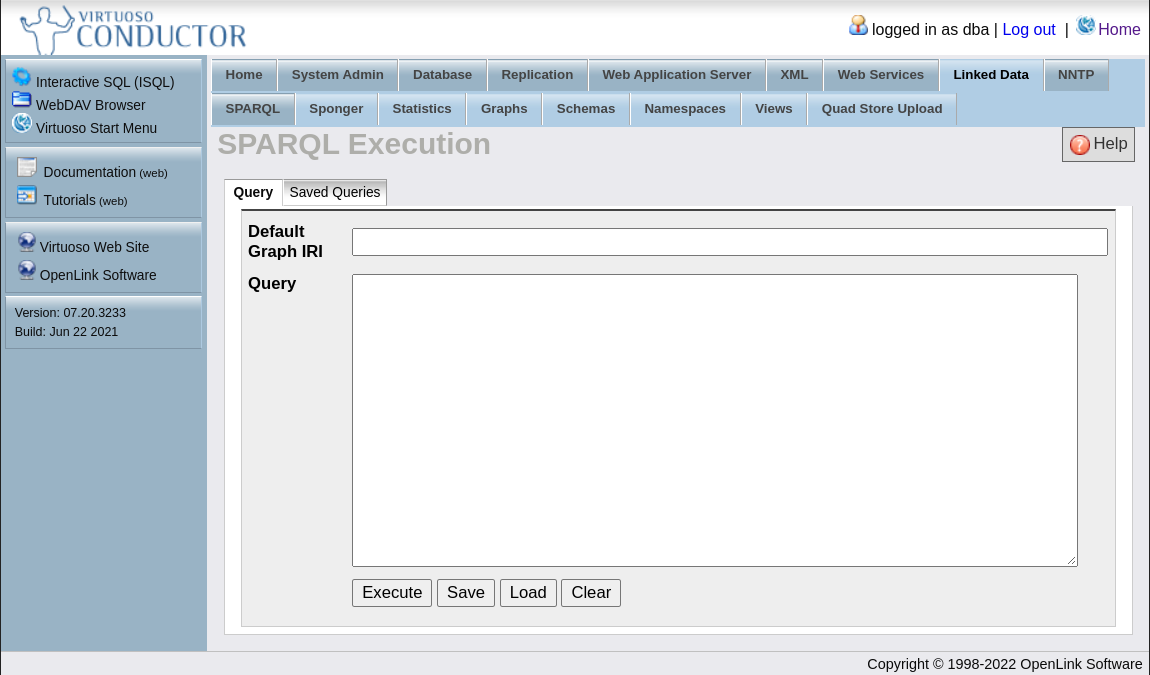

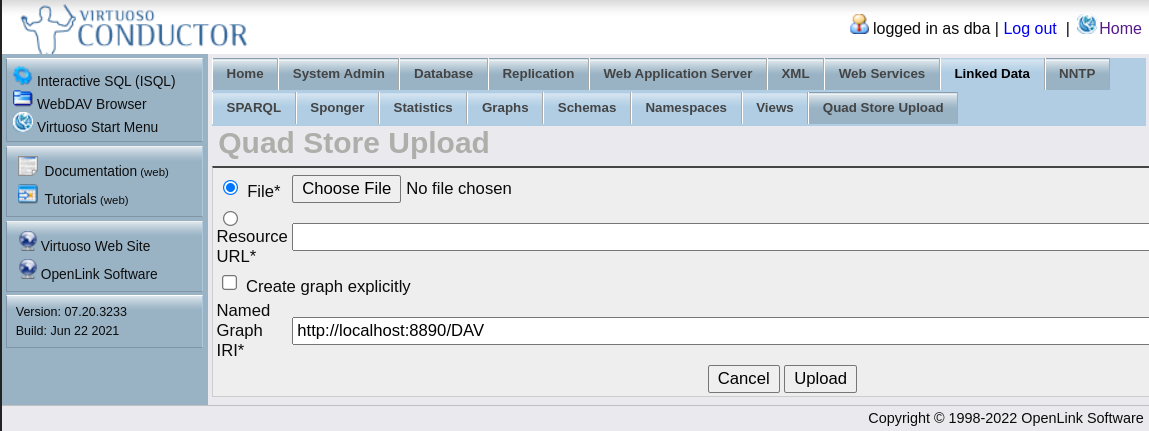

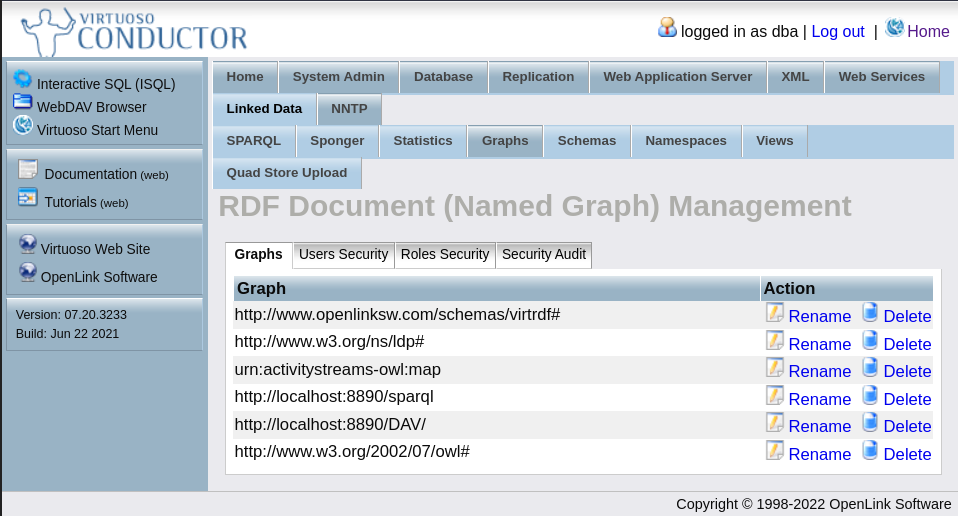

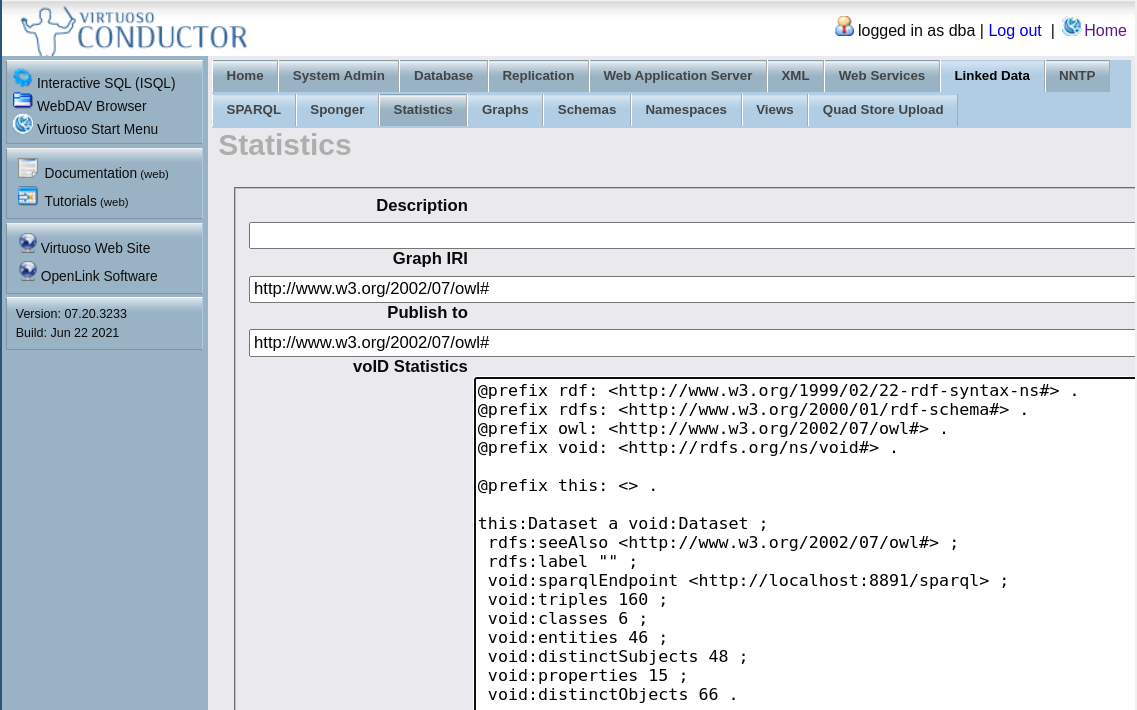

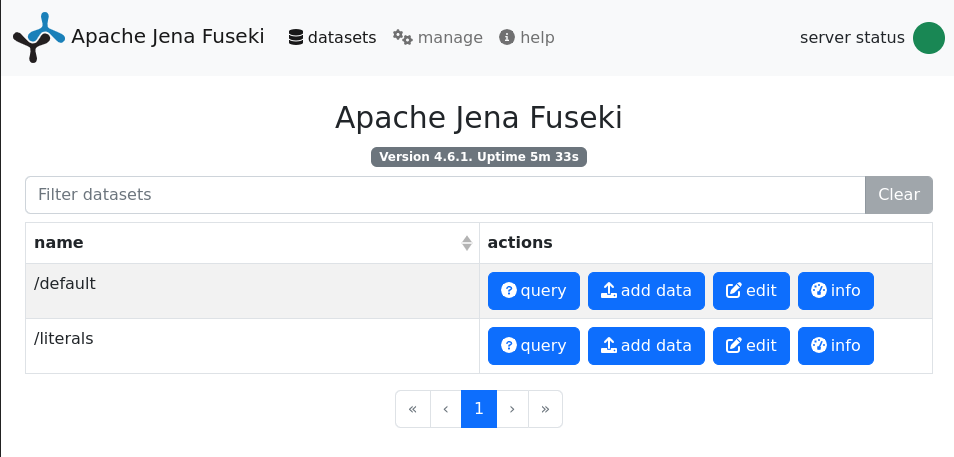

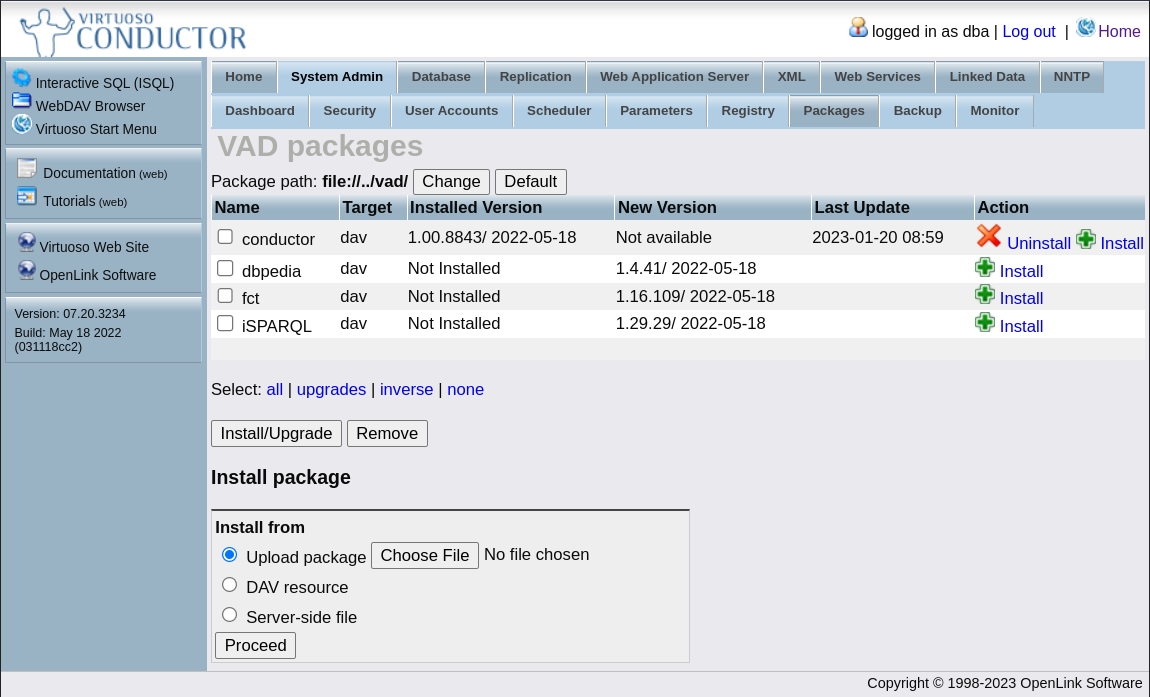

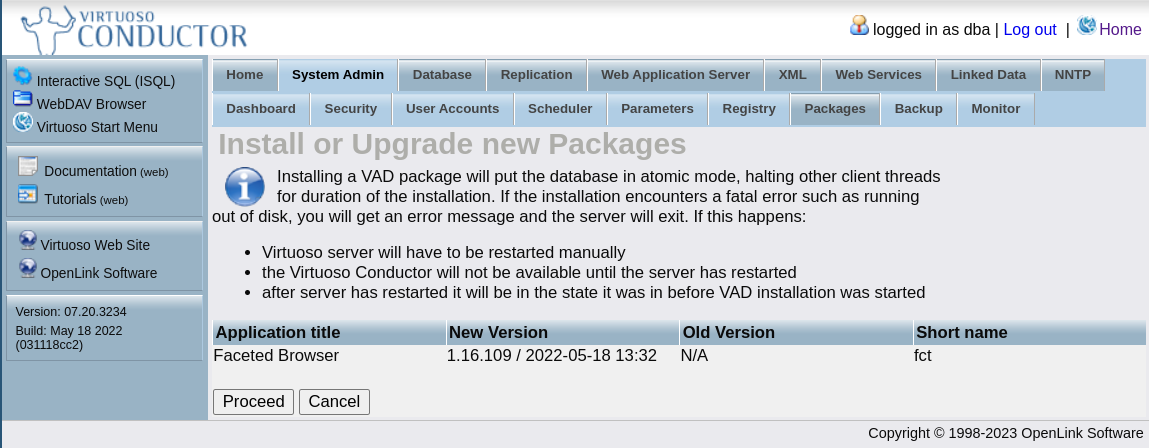

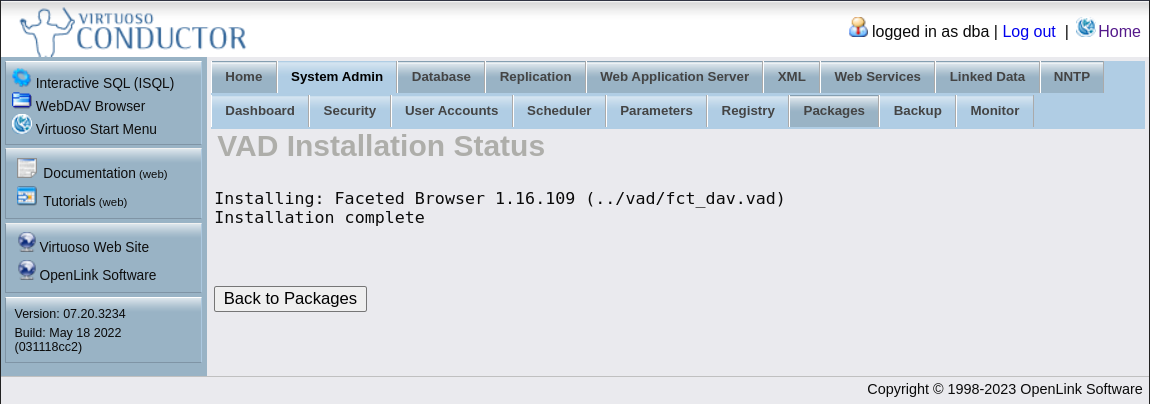

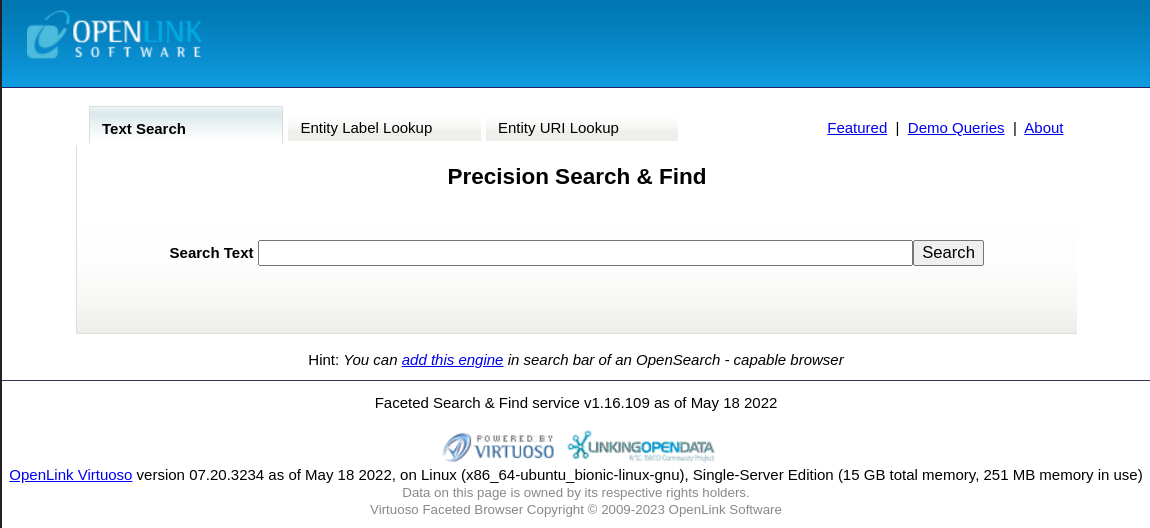

The storage of RDF triples is covered in Chapter 4. Two open source technologies deserve detailed attention: Fuseki and Virtuoso. After reading this chapter you should be comfortable using both as back-end to your linked SDI.

You start to get your hands dirty in Chapter 5 with an introduction to the SPARQL query language. A collection of examples slowly makes you comfortable with the language, from obtaining simple information, to retrieving aggregates, to complex queries creating new sets of RDF triples.

With the basics of the Semantic Web introduced, the book finally delves into the geo-spatial domain in Chapter 6. The GeoSPARQL ontology is thoroughly reviewed, again with an example detailing the development of a geo-spatial web ontology. The query language aspect of GeoSPARQL is also visited in this chapter, with an exhaustive review of all geo-spatial functions defined in the standard.

In all likelihood, the data you currently work with does not exist in the form of triples, but Chapter 7 is here to help. In it you can learn various methods to transform relational and tabular data to RDF triples. Again all based on open source technologies.

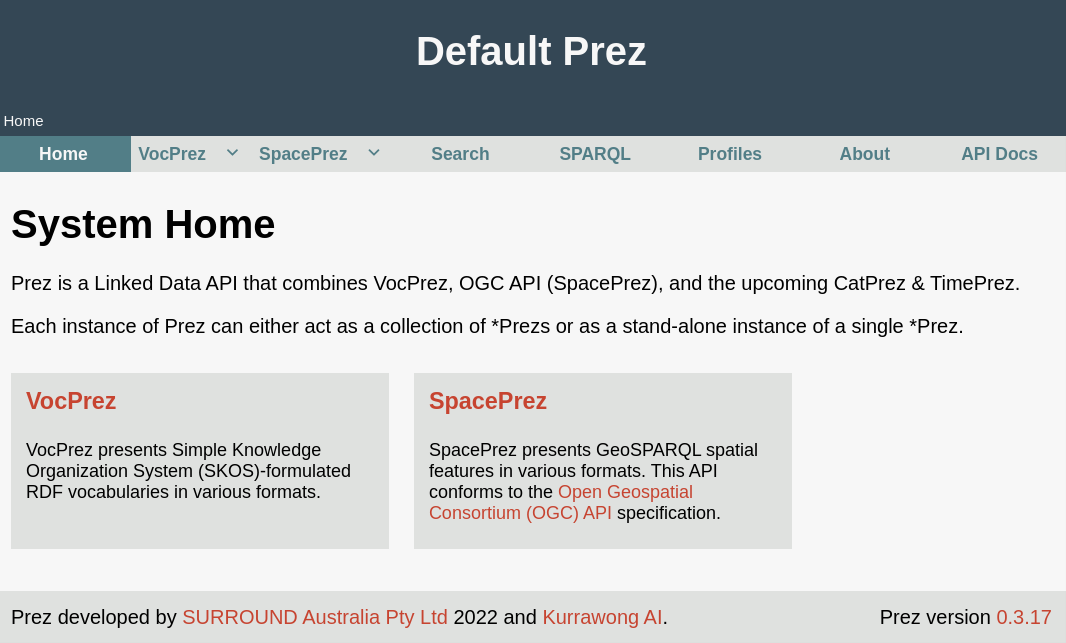

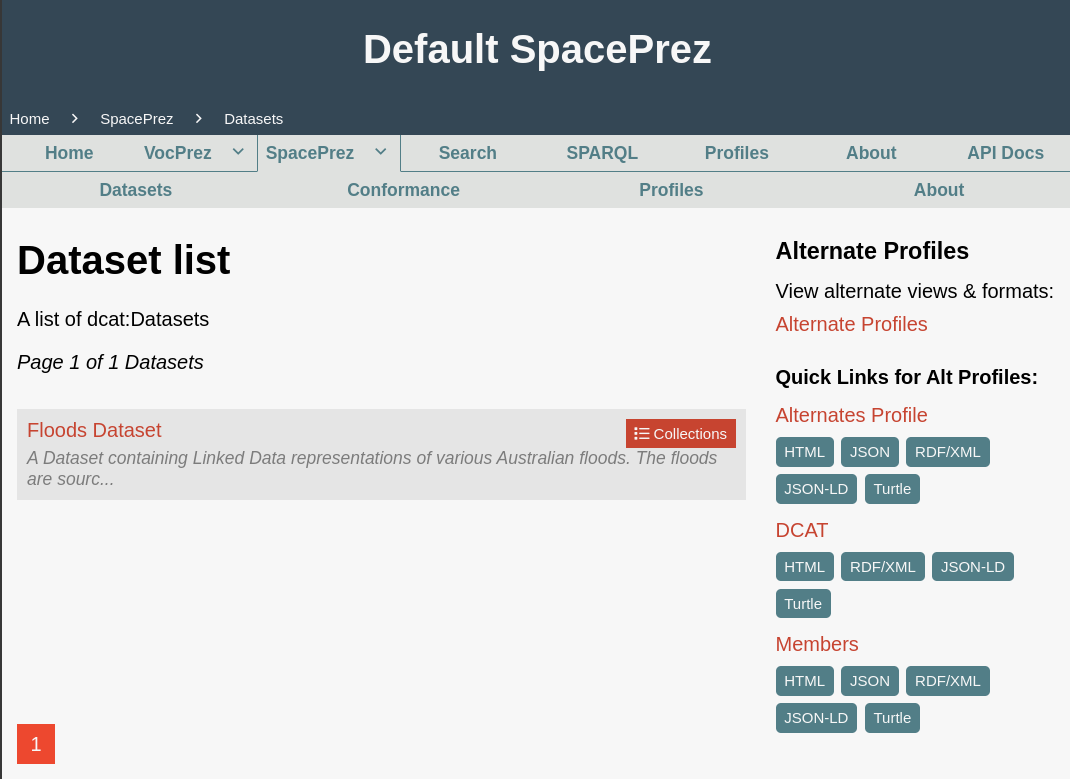

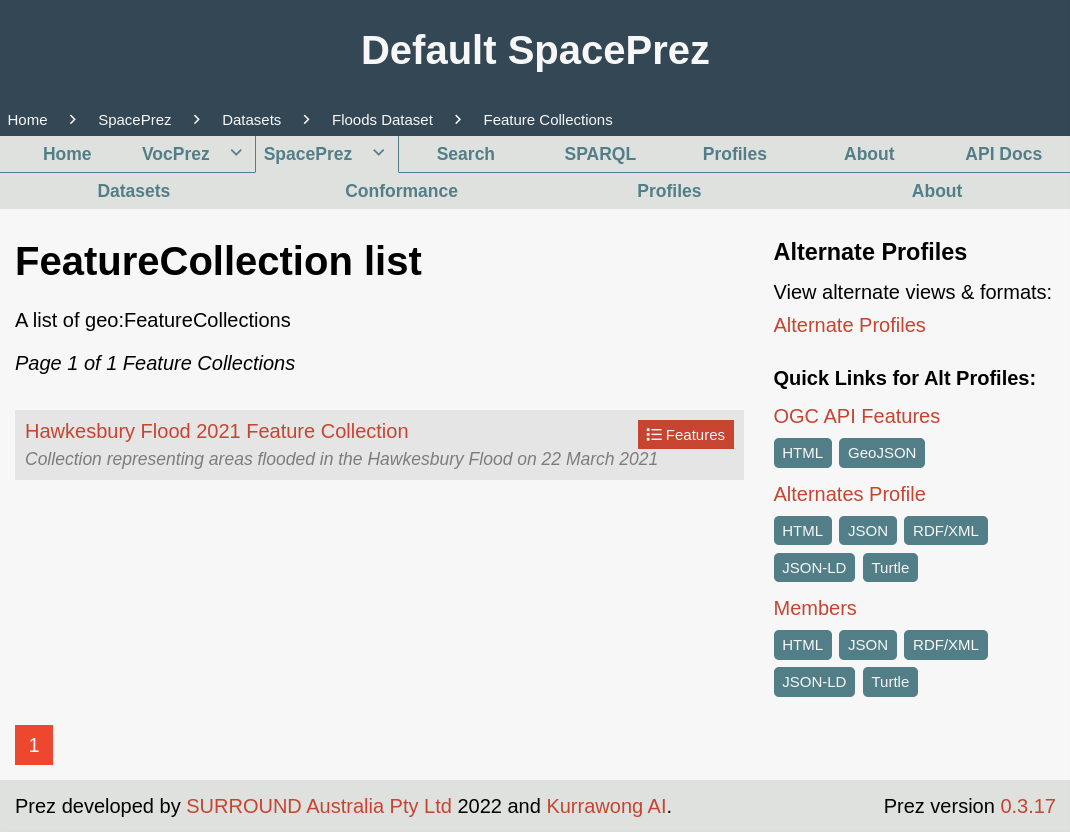

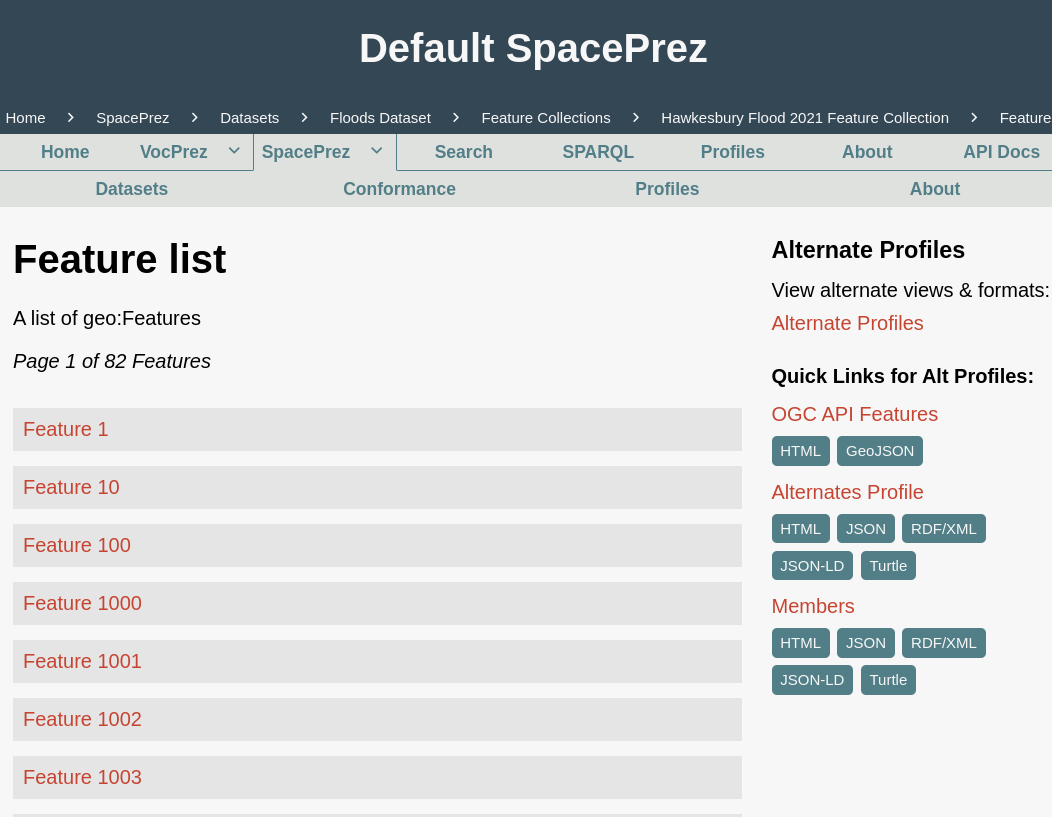

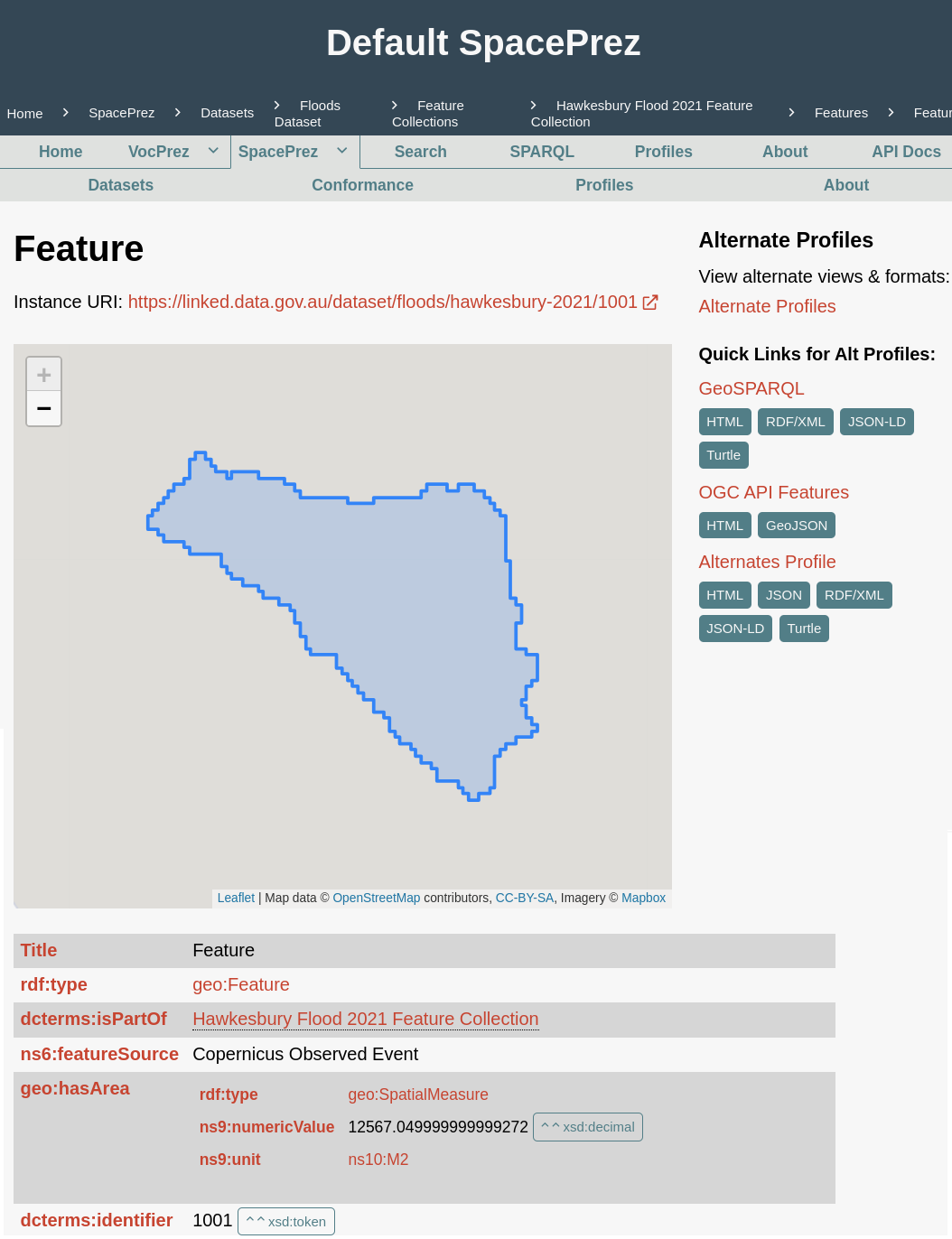

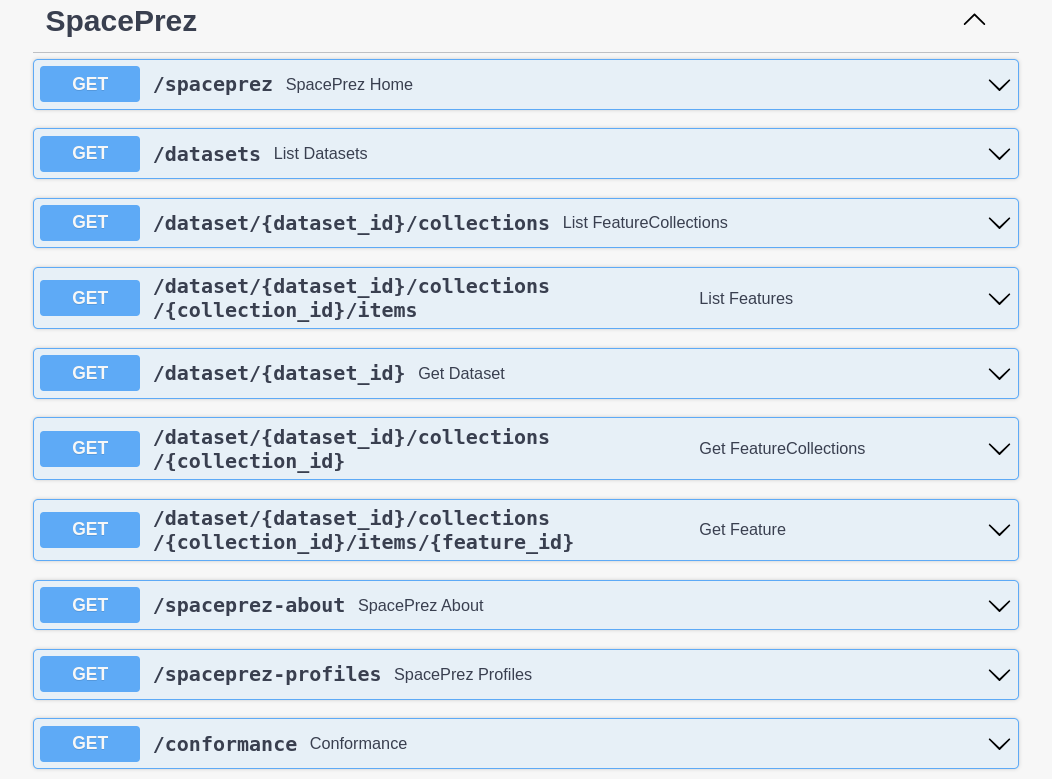

With storage and transformation consolidated, it is the turn of data provision, tackled in Chapter 8. A number of methods and technologies are reviewed, with the role of the novel OGC APIs explored in more detail, particularly through the groundbreaking Prez open source server.

And since data is of no value without meta-data, the book culminates with that topic in Chapter 9. The Semantic Web is well matured in this field, offering multiple ontologies that can be combined into a rich and purposeful cataloguing of geo-spatial datasets.

Before departure there is space for a few observations in Chapter 10 on where geo-spatial Linked Data may be headed next. Emerging directions of development are briefly sketched, so you may evolve your Linked SDI in a suitable path.

Acknowledgements

I would start by acknowledging the role Jorge Mendes de Jesus had on the development of this book. It was his endearing crave for novel technologies that eventually lead me to dive seriously into the Semantic Web. Throughout the past decade his insights and experiments have constantly challenged my own understanding, definitely contributing to propel my career.

Rául Palma and Bogusz Janiak also contributed mightily to the fruition of this manuscript, even if indirectly. Working with them put me in contact with best practices in the Semantic Web, plus myriad technologies that truly enable the Linked Data paradigm. It was from the cooperation with Rául and Bogusz that I realised the need for this book.

Important was also the space created at ISRIC to pursue a Linked Data agenda. While much to the initiative of Jorge Mendes de Jesus, it were Bas Kempen and Fenny van Egmond who fostered research and experiment on this field. Their work eventually coalesced on the GloSIS web ontology and all consequent developments in soil ontology and data exchange.

Finally I thank those individuals that supported me on a personal level throughout this period. Beyond my family I would name Susana, Jeroen, Amy, Ian, Marisa, Christiane and Daphne.

1 Introduction

This chapter offers a first contact with the key aspects of Linked Data and the Semantic Web. Even if you are already familiar with both paradigms it is important to fully understand the impact they have on data exchange and use. And on the particular case of the geo-spatial data, the Semantic Web is bringing about changes that are nothing short of a revolution.

1.1 What is Linked Data?

Mostly likely, as you open this book, you already have some understanding of what Linked Data means. The term coined by Tim Berners-Lee in 2006 has become a household name, both in computer science as in data science. Even if that is the case, it is important to understand what Linked Data stands for and why it is significant. If you never heard the term before do not fear, hopefully these pages provide a simple enough introduction.

1.1.1 My data

Data. For most folk working in data science or even GIS, data equates to a flat table, possibly with field names in the first row and values in the reminder. Spreadsheets, data frames, the names are many to signify this basic and largely unstructured construct. The problems with such a frugal paradigm are many, but the concern here is the actual meaning of each datum, which goes well beyond the format.

Consider Table 1. It presents a data fragment with various columns. What exactly does it represent? There are geographic coordinates and dates and possibly some kind of measurement. Longitude and latitude are obvious terms, but to which geodetical datum do they refer? One can assume the WGS84, but on which epoch? Then there is the date, since the columns are written in English one can assume it refers to the Gregorian calendar, but some countries use a different calendar. Finally there is the Height column, beyond understanding it as a measure few other conjectures can be made. The height of what? Measured on which units?

| Lon | Lat | Date | Height |

|---|---|---|---|

| 43.1 | -19.2 | 5.0 | |

| -101.9 | -32.7 | 2010-01 | 3.2 |

Perhaps you have dealt with similar situations in the past. In fact the difficulties in identifying the true meaning of data is one of the contributing factors to the emerging informal discipline of “data wrangling”. Before feeding data to their processes, data scientists must correct errors, remove redundant and incomplete records, and consolidate datasets from different sources. Without the precise meaning of each datum and datum class, this work becomes far more complex and laborious.

A survey conducted by Crowdflower in 2016 revealed that data scientists spend up to 80% of their work time on data wrangling (Crowdflower 2016). This high figure has since been contested, but subsequent surveys have pointed to this being indeed the activity on which data scientists spend the majority of their time (Anaconda Inc. 2020). It is not statistical analysis, predictive modelling or even data representation that occupies the life of data scientists. Most of the time they are just trying to figure out what the data are and how to use them.

Essentially, Linked Data aims to address these problems. Make data easy to discover, identify and understand. If instead of simple names, the first row of Table 1 contained hyperlinks to detailed and universal definitions of those quantities the life of the data scientist would be greatly simplified. Linked Data is not so much about the links, but rather about making data unequivocally and universally understandable. Keep this simple concept in mind, the details of the how will flow throughout this book.

1.1.2 Principles

Linked Data was a concept proposed to fully express the impact of the Semantic Web on data exchange (Tim Berners-Lee 2006). Its broad idea is to present data on the web not as a set of enclosed silos, but rather as a network. It is a different paradigm to represent and exchange data. While it may appear alien, it is rather powerful and closer to how humans think and information exists in the real world. Today the principles of Linked Data can be resumed into three core ideas:

Data are primarily represented by links. Therefore every datum that is not a literal points to a resource providing further meaning or context. Record identifiers, units of measurement or concerned variables, all are represented with links leading to their precise definition and interpretation.

Data relate in networks. As every non literal is a link, data are arranged in a network. And different networks connect to each other building large constellations of data.

Data is readable by both humans and machines. Linked data form large networks of information on the internet that computer programmes may easily browse. However each link resolves to a resource (e.g. document) that is directly interpretable by humans.

The vision of data as a network of information is not at all abstract. In the real world information does not exist in silos, and has always multi-dimensional relations within itself. Humans capture information leaning it down to a convenient form. For most readers data equates to tabular records like the one in Table 1, possibly not even normalised. Linked Data is completely different, it is not laid out in tables and records, they build networks, or as they are more commonly known: Knowledge Graphs.

1.1.3 The Semantic Web

You may have already heard of the Semantic Web (SW), possibly even as a synonym of Linked Data. In fact it is an umbrella term encompassing standards and specifications issued by the World Wide Web Consortium (W3C) (Tim Berners-Lee, Hendler, and Lassila 2001). The Semantic Web is an infrastructure realising the broad vision of Linked Data, but admittedly the latter may exist without the former.

Chapter 2 reviews in the detail the main building blocks of the Semantic Web. In general terms its character can be synthesised into:

URIs embody links: links follow a determined structure, and may have different nature. They also function as unique identifiers in the World Wide Web (WWW).

Data are expressed as triples: the atomic datum element reflects human speech but is also understandable by machines.

Ontologies are expressed as triples: the same paradigm expresses both data and ontological meaning. Ergo, ontologies are machine readable.

Data sources are all linked in a federation: any data source in the Semantic Web can be combined with any other, not matter how many. Be it to reason upon the data or simply to retrieve relevant sub-sets of data.

Everything is allowed unless explicitly forbidden: data can be expressed and used in any way or form convenient to the end user (human or machine), as long as ontological restrictions are met.

While in the present day Linked Data is sometimes perceived as a broader concept, this book takes solely the Semantic Web path to geo-spatial data on the web. It will take you from the core specifications that intertwine with the WWW itself, through the theoretical foundations of ontological expression as Linked Data and then into the practical specifications making for the provision and consumption of geo-spatial data.

1.2 The Impact of the Semantic Web

1.2.1 The Five Star ranking and Linked Open Data

At the same time he proposed the concept of Linked Data, Tim Berners-Lee also put forth a five star rating system to guide data providers (Tim Berners-Lee 2006). This system thus presents a series of steps data providers must take to render their data truly web enabled, truly Linked Data (Figure 1). The Five Start Data rating system is summarised in Table 2.

| * | Available on the web (whatever format) but with an open licence, to be Open Data. |

| ** | Available as machine-readable structured data (e.g. Microsoft Excel instead of image scan of a table). |

| *** | Two stars plus non-proprietary format (e.g. CSV instead of Microsoft Excel). |

| **** | All the above plus: use open standards from the W3C (RDF and SPARQL) to identify things, so that people can point at your data. |

| ***** | All the above, plus: link your data to other people’s data to provide context. |

Berners-Lee went further to define the restrict result of the Five Start Data ranking as Linked Open Data (LOD). Without an open licence data may be linked, but cannot be used freely by everyone. Closed Linked Data can be relevant and useful within corporate contexts, but it is not usable by third parties. The Five Star Data ranking system eventually became synonym with LOD.

A dedicated web site has been created to help promoting the concept of Linked Open Data 1. It summarises good practices, links to training contents and provides successful examples of LOD provision.

1.2.2 Spatial Data on the Web Best Practices

In the geo-spatial domain the “game changer” would result from a joint W3C-OGC initiative embodied by the Spatial Data on the Web Working Group (SDWWG). In 2017 this work group published a report titled “Spatial Data on the Web Best Practices”, that brought into question the overall philosophy behind the OGC’s standards for digital data provision (Tandy, Brink, and Barnaghi 2017). Standards such as the Web Mapping Service (WMS), Web Feature Service (WFS) or Web Coverage Service (WCS), are all based on the Simple Object Access Protocol (SOAP). Throughout the past two decades they became the backbone of Spatial Data Infrastructures (SDI). However, SOAP is an application communication protocol whose development dates back to the 1990s, prior to the emergence of the SW. While many standards and applications came to rely upon SOAP, it is today a largely outdated protocol, that does not tap on the full potential of the internet. The main issues identified by the SDWWG with the SOAP philosophy applied to geo-spatial data can be summarised as:

- URIs are not used to identify spatial resources on the web.

- Modern API frameworks, like the OpenAPI (Miller et al. 2021), are not being used.

- Linked Data is fundamental to the provision of spatial data on the Web.

- SDIs based on OGC web services are difficult to use:

- OGC Web services do not facilitate indexing of their content by search engines.

- By design, catalogue services only provide access to meta-data, not the data themselves.

- It is not possible to access data trough links (e.g. URLs), in most cases it is necessary to construct some kind of query.

- It is often difficult for non-domain-experts to understand and use the data.

To address these issues the SDWWG proposes a five point strategy inspired on the Five Star Scheme:

| * | Linkable: use stable and discoverable global identifiers. |

| ** | Parseable: use standardised data meta-models such as CSV (Shafranovich 2005), XML(Bray et al. 2006), RDF (Schreiber and Raimond 2014), or JSON (Bray 2014). |

| *** | Understandable: use well-known or at least well-documented vocabularies/schemas. |

| **** | Linked: link to other resources whenever possible. |

| ***** | Usable: label your document with a license. |

The SDWWG then goes on to describe a series of best practices towards these five goals. Their aim is to bring geo-spatial data provided by SDIs de facto to the Web. Among those four should be highlighted:

- Best Practice 1: Use globally unique persistent HTTP URIs for Spatial Things.

- Best Practice 2: Make your spatial data indexable by search engines.

- Best Practice 3: Link resources together to create the Web of data.

- Best Practice 12: Expose spatial data through ‘convenience APIs’.

This manuscript guides you through the methods and tools empowering your SDI to do achieve exactly this.

1.3 FAIR Data

Many scientific and industrial fields transitioned from a state of data wanton to data galore during the past decade. In a short period, interpreting and using data became a major concern, as voluminous data sets pile up without use. This problem led to the assembly of a wide consortium of data stakeholders, encompassing academia, industry and government. One of the goals of this consortium was to facilitate automated access and reuse of scholarly data, but in a way that would also ease these tasks to humans. This initiative would eventually lay out what became known as the FAIR principles (Wilkinson et al. 2016).

FAIR stands for Findable, Accessible, Interoperable and Reusable. They can be regarded as a minimum set of standards without which machines and humans are incapable of using a dataset. Note that these go well beyond the concept of “Open Data”. A dataset may be open but not really usable in practice.

Soon enough the FAIR principles were adopted as goals by governments, notably by the European Commission in 2016 (Commission 2016). Still that year these principles were endorsed by the G20 (Leaders 2016). Many similar initiatives ensued, with institutions promoting FAIR principles appearing around the world. FAIR principles became a component of initiatives such as the European Open Science Cloud (Mons et al. 2017) or the European Digital Single Market (Commission 2016). The Go FAIR initiative 2 is perhaps the most visible of these efforts.

1.3.1 The Principles of FAIR

The sub-sections below go through each of the principles, as currently detailed by Go FAIR (FAIR 2022). The process towards compliance with these principles is also known as “FAIRification”.

These principles refer to three types of entities: data (or any digital object), meta-data (information about that digital object), and infrastructure. For instance, principle F4 defines that both meta-data and data are registered or indexed in a searchable resource (the infrastructure component).

1.3.1.1 Findable

The first step in (re)using data is to find them. Meta-data and data should be easy to find for both humans and computers. Machine-readable meta-data are essential for automatic discovery of datasets and services, so this is an essential component of the FAIRification process.

F1. (Meta-)data are assigned a globally unique and persistent identifier.

F2. Data are described with rich meta-data (defined by R1 below).

F3. Meta-data clearly and explicitly include the identifier of the data they describe.

F4. (Meta-)data are registered or indexed in a searchable resource.

1.3.1.2 Accessible

Once the user finds the required data, she/he needs to know how can they be accessed, possibly including authentication and authorisation.

A1. (Meta-)data are retrievable by their identifier using a standardised communications protocol

A1.1. The protocol is open, free, and universally implementable

A1.2. The protocol allows for an authentication and authorisation procedure, where necessary

A2. Meta-data are accessible, even when the data are no longer available

1.3.1.3 Interoperable

The data usually need to be integrated with other data. In addition, the data need to interoperate with applications or workflows for analysis, storage, and processing.

I1. (Meta-)data use a formal, accessible, shared, and broadly applicable language for knowledge representation.

I2. (Meta-)data use vocabularies that follow FAIR principles

I3. (Meta-)data include qualified references to other (meta)data

1.3.1.4 Reusable

The ultimate goal of FAIR is to optimise the reuse of data. To achieve this, meta-data and data should be well-described so that they can be replicated and/or combined in different settings.

R1. (Meta-)data are richly described with a plurality of accurate and relevant attributes

R1.1. (Meta-)data are released with a clear and accessible data usage license

R1.2. (Meta-)data are associated with detailed provenance

R1.3. (Meta-)data meet domain-relevant community standards

1.3.2 The role of Linked Data in FAIR

The FAIR data principles function both as an enabler and a benefactor of Linked Data. They largely overlap with the Five Star data ranking in the aims to make data usable and interconnected. There is thus a confluence of goals highlighting the impact of Linked Data. In the opposite sense, the Linked Data paradigm provides the means to achieve many of the items in the FAIRification process.

It is worth to outline the role of Linked Data in achieving all items in the Interoperable component. By design, I1 to I3 are met by specifications in the Semantic Web. And perhaps more important is R1.3, in which the FAIR data principles acknowledge the need for an ontological or semantic dimension to render data effectively reusable.

Quite how Linked Data achieves these goals is the topic for the coming chapters.

2 The pillars of the Semantic Web

The Semantic Web is an umbrella designation for a collection of specifications issued by the W3C. Starting with the Uniform Resource Identifier (URI), then with the Resource Description Framework (RDF), further with the SPARQL query language, and finally with the Web Ontology Language (OWL). These four specifications are the backbone of the Semantic Web, setting the canonical path to Linked Data.

With time the W3C came to publish many other specifications, that augment or complement the Semantic Web. Some are referred in later stages of this manuscript, others are too specific for a direct reference. But among these is GeoSPARQL, the bridge from the Semantic Web to geo-spatial data. This specification is presented thoroughly in Chapter 6.

2.1 Uniform Resource Identifier

2.1.1 Overview

At face value this section may come out as esoteric and perhaps not the most interesting subject in the context of data exchange and SDIs. Therefore, if you feel you already have a good grasp on what a URI is you might well skip it. However, the concept of a URI is fundamental to the Semantic Web, and to data sharing over the internet in general.

If you worked with a database before, or even with an unstructured dataset like a CSV file, you know the importance of identifying each data element or data record. Usually this is achieved with sequential integers, like the row number in a CSV file or an auto-increment field in a relational database. That works fine to identify data elements that exist within an isolated dataset, but when we consider sharing data over the internet that scheme simply fails.

This is a problem URIs solve: provide unequivocal identifiers that are valid everywhere and every time. They guarantee that no other data on the internet gets mistaken with your own, and that their precise meaning is unambiguous.

Beyond identifying data, URIs serve also as locators, thus performing the crucial role of networking different data and data sources. In essence they are the links in Linked Data.

A Uniform Resource Identifier (URI) is a unique sequence of characters that identifies a logical or physical resource used by digital technologies (Tim Berners-Lee 1994). URIs may be used to identify anything, including real-world objects, such as people and places, concepts, or information resources such as web pages and books.

The URI specification is meant to be hierarchical, it can be further specialised for increasingly bespoke purposes. This section covers two specialisations that are most relevant in the Semantic Web: the URL and the URN. They are primarily used to locate resources on the internet, however, a URL can also locate resources in a file system or in a closed, private network.

2.1.2 Specification

In its most basic form a URI is formed by two character sequences

separated by a colon character (:):

scheme:path (T.

Berners-Lee et al. 1998). The scheme is a string that identifies

a particular protocol used to retrieve the resource. The Hyper Text

Transfer Protocol (HTTP) is the most used, but many others exist (Klyne 2023). In

abstract, it is possible to use any scheme, even an ad hoc one.

The path determines the specific location of the resource using the

scheme declared. The most simple form of organising a path is using the

forward slash character (/) to specify a path through a

hierarchy, similar to the folder structure in a file system (a few

examples in Listing 1).

Listing 1: Abstract URI examples

scheme:path/to/some/resource

scheme:country/state/county/cityThe path can also start with the identification of a host, usually a

network node that makes resources available according to the specified

scheme. In certain schemes, like HTTP, host names are managed and

assigned by an authority. When the URI includes a host name, the path

must begin with a double forward slash (//). A good example

of an authority is the W3C itself, that manages hosts identified by the

string www. Listing 2 shows a URI identifying an hyper-text

document published at the W3C’s website.

Listing 2: URI linking to a HTML document published by the W3C through the HTTP protocol.

https://www.w3.org/Addressing/URL/uri-spec.htmlThe authority assigns the host name to an institution, usually the

host name reflects the name of the institution itself. The institution

is thus responsible for the structuring of the host name into sub-names

(e.g. inspire.ec.europa.eu). In the Semantic Web the host

name in a URI further expresses responsibility for the resource it links

to (e.g. data, semantics).

A further relevant component to the path is the identification of a

fragment, i.e. a particular element or section within a resource. The

fragment is another character string positioned at the end of the URI,

separated from the path with a hash character (#):

scheme://host/path#fragment. A good example is the

identification of a heading within an hyper-text document (Listing

3).

Listing 3: URI linking to the fragment of a HTML document.

https://www.w3.org/Addressing/URL/uri-spec.html#Examples A URI can get considerably more elaborate with the query segment. It

features between the path and the fragment, and is optional as the

latter. The question mark character (?) sets its beginning,

and is then composed by a series of key-value pairs. Each pair is

separated by an ampersand (&) or a semi-colon

(;) , with the pair itself separated by an equal character

(=). Listing 4 shows an example with two key-value pairs.

There is no theoretical limit to the number of pairs the query segment

may include, resulting in long URIs. While it may come across as

cumbersome, the query segment is an important element in passing

information to remote services or applications. The query segment is

rarely employed in the context of the Semantic Web, but it is important

to be aware of its role.

Listing 4: URI including a query segment.

https://www.w3.org/Addressing/URL/uri-spec.html?key1=value1&key2=value2 This was just a brief introduction to the URI specification, it goes far beyond these essential elements. However, the scheme, host name, path and fragment are the most relevant in the Semantic Web. Defining a URI policy for your institution or your own data is an essential task for Linked Data provision. That aspect is tackled in detail in Chapter 7.

2.1.3 Uniform Resource Locator

A Uniform Resource Locator (URL) is a specific type of URI that locates resources in the World Wide Web (WWW) (T. Berners-Lee, Masinter, and McCahill 1994). Moreover, a URL also specifies the means through which that resource may be retrieved, with a known web protocol identified in the scheme segment. This is the most important distinction between a URL and a URI in general.

When you browse the internet, the browser programme usually shows the

web page URL starting with http or https in

what is commonly called an address bar. The most common protocols are

http and https for web pages, ftp

to retrieve files with the File Transfer Protocol and

mailto to e-mail addresses.

The URL makes further use of the domain name concept, put in practice

in the 1980s to identify nodes in a computer network. This is the

segment in a URL path that includes dot characters (.),

corresponding to the broader concept of host name in the general URI

specification. Domain names are translated into the physical addresses

of computer nodes according to the rules of the Domain Name System (DNS)

(Mockapetris 1987).

The URL https://url.spec.whatwg.org/#url-representation

refers to a resource fragment named url-presentation

located in a WWW host node with the domain name

url.spec.whatwg.org that can be retrieved using the

Hypertext Transfer Protocol Secure (HTTPS).

2.1.4 Uniform Resource Name

If a URL locates a digital resource on the WWW, a URN identifies a resource that can not be retrieved through the WWW (Moats 1994). URNs can identify physical objects, logical concepts, processes and any other immaterial assets. The primary function of a URN is to identify unequivocally within the digital world, a thing that is not digital in nature.

As a special URI, a URN distinguishes itself by starting with the

urn schema. Paths in a URN are dominated by namespaces,

that allow their management within a certain domain. Each namespace is

under the management of an authority that determines how the reminder of

the path is employed to function as an identifier. The namespace and the

path are separated by a colon (:). A typical URN assumes

the form urn:namespace:path#fragment. Table 3 provides some

examples.

| URN | meaning |

|---|---|

urn:isbn:0553283685 |

10 digit ISBN code for a book |

urn:ogc:def:crs:EPSG:6.3:26986 |

A coordinate reference system issued by the EPSG and curated by the OGC |

urn:epc:id:imovn:9176187 |

Identifier of a shipping vessel |

urn:lex:eu:council:directive:2010-03-09;2010-19-UE |

A European directive (legislation) |

2.2 Resource Description Framework

The Resource Description Framework was the first standard issued by the W3C towards the Semantic Web. Its primary goal was to facilitate the exchange of data over the internet, independent of particular software makers or underlying operating systems. It went far beyond that goal, laying the seed for a new branch of ontological development in information science.

2.2.1 Triples

The core idea in RDF is to state facts. A simple example of a fact statement would be “my bicycle is black”. This formulation is common to natural language, and is grammatically composed by a subject, “my bicycle”, a predicate “is” and an object, “black”. In RDF all data exist as statements composed by three elements like these, subject - predicate - object. That is why this atomic datum is also called triple. In fact this is one of the oldest approaches to knowledge representation in computer science, with its roots dating back to the dawn of artificial intelligence in the 1960s. Below a few more examples of triples expressed in natural language:

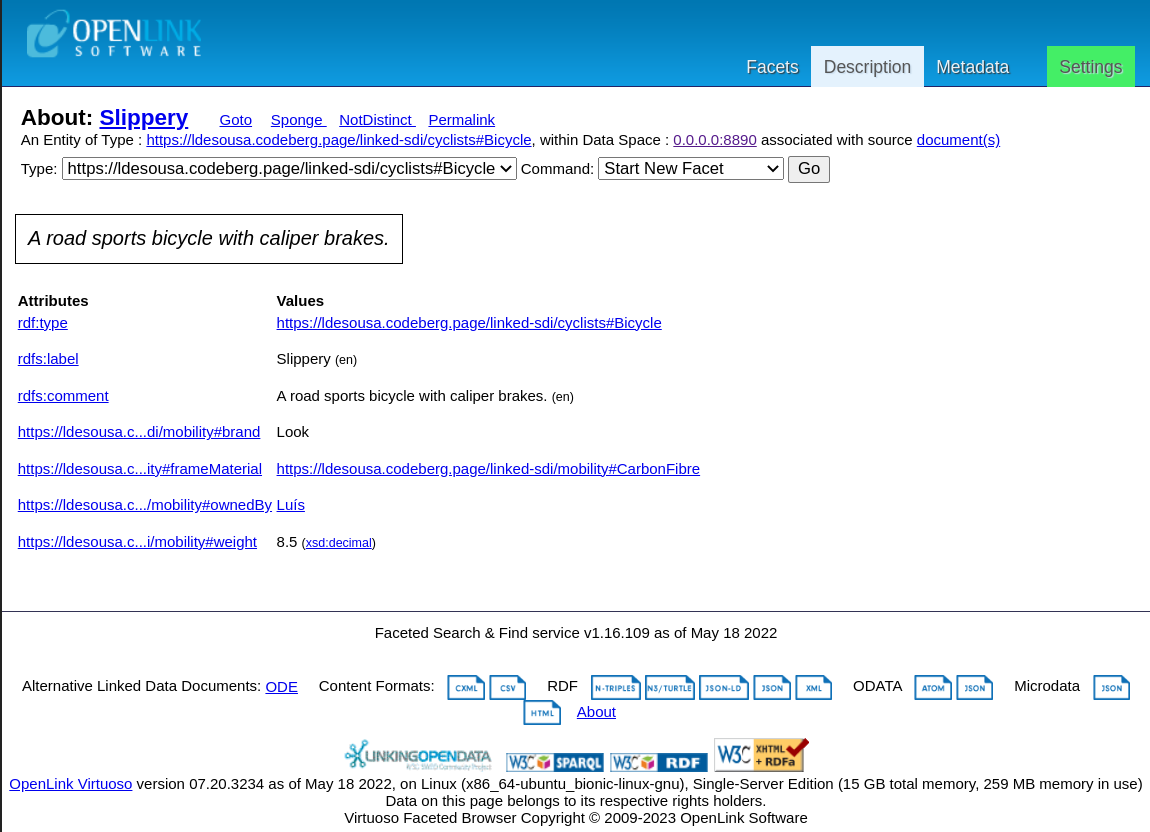

Slippery is a bicycle.

Slippery has caliper brakes.

Slippery weigths 8.5 kg.

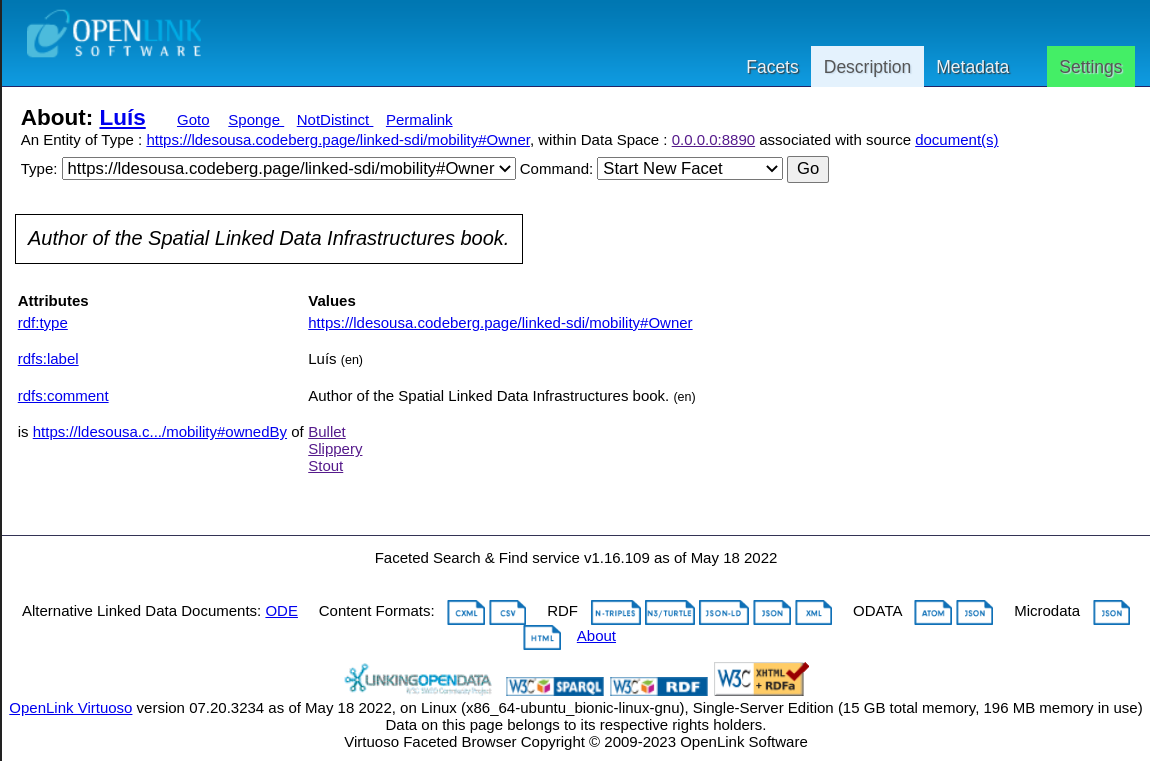

Luís owns Slippery.

Note the text colours in each sentence: red marks the subject, green the predicate and blue the object. These are the same concepts found in the grammars of natural languages. The subject is a “thing”, for instance, a person, a place, an object, an idea. In natural language the subject is the element to which the verb applies, thus determining how the verb is conjugated regarding person and plurality. The predicate identifies everything in a sentence that is not a subject, verb, adjectives, adverbs, etc. But in RDF the predicate has a leaner definition, containing solely the verb, for instance expressing a state, an action, or a relation. And finally there is the subject, which is another “thing”. It is the target of the predicate, the receiver of an action, a specific state or a concrete property. The concept of subject is parallel to natural language, with the important difference that in a RDF triple there is always a subject, whereas it may be absent in human discourse.

The small examples above do not precisely match human speech, which tends to be more informal and often less structured. Someone speaking only with triples like these would likely come out as untoward, borderline alien. But humans understand them, without having to learn new concepts. And here is the power of triples: they are as easily understandable by humans as by computers.

Triplets simplify information and render it objective. From the set of triples above an automated system should be able to answer a question like “How much Luís’ bicycle weights?” Or more complex questions such as “Who owns a bicycle weighting less than 10 kg?”.

But if computers understand triples, the natural languages we humans speak may not be as easy. Natural languages provide different ways to express the same information and are also susceptible to context. Therefore something more formal is necessary to express triples in an ambiguous form to facilitate life for machines. Such is the role of RDF.

2.2.2 Adding in URIs

RDF is a language, composed by a grammar and an alphabet. The grammar sets the rules for its use, the alphabet determines the symbols with which concepts are expressed. If this sounds familiar it is because RDF is indeed inspired on natural languages and how humans organise, or retain knowledge.

The concept of triple is the RDF grammar. As for the symbols, they are either links or literals. The latter are the simpler to explain, they represent concrete and indivisable bits of information. In most cases literals are numbers or strings, they can be more complex, as you will see in later chapters, but for now these are enough. In the set of triples given above, there is only one literal: “8.5 kg”. The subjects and objects “Luís”, “bicycle” and “Slippery” are things (expressed as strings) but not literals. The difference between literals and things will become more evident in Section 3.3.

The second kind of symbol in RDF is thus the link. In practice this means a URI, the reason it was introduction in Section 2.1. Again recalling the example above, all the objects, subjects and predicates that are not literals must be expressed as URIs: “Slippery”, “is a”, “bicycle”, “has”, “calliper brakes”, “Luís” and “owns”. URIs serve two proposes: to locate a thing on the WWW and to provide context, or semantics about that thing. Semantics is particularly important with predicates, for instance to express exactly what “is a” or “weights” means. But also with things, for instance to provide concrete meaning to something like “bicycle”. Thus the expression Semantic Data.

Then how can the triples about “Slippery” be expressed with URIs? Since these triples refer to one of my bicycles, I simply use the URL to the web version of this manuscript. This essentially makes me, the author, responsible for giving meaning to the subjects, objects and predicates, precisely what I want. Selecting the appropriate URI for your data is actually an important step in linked data provision, an aspect reviewed in more detail in Chapter 7. Listing 5 shows the triples about “Slippery” based on the URL to the root document in this manuscript web page, with the fragment identifying each subject, predicate and object.

Listing 5: The triples about the Slippery bicycle expressed with URIs.

http://www.linked-sdi.com#Slippery

http://www.linked-sdi.com#is_a

http://www.linked-sdi.com#bicycle

http://www.linked-sdi.com#Slippery

http://www.linked-sdi.com#has_brakes

http://www.linked-sdi.com#caliper

http://www.linked-sdi.com#Slippery

http://www.linked-sdi.com#weight_kg

8.5

http://www.linked-sdi.com#Luís

http://www.linked-sdi.com#owns

http://www.linked-sdi.com#Slippery Note how some of the predicates have changed, like “has” “caliper brakes” into “has_brakes” and “caliper”. Here you start to see some of the mechanics rendering triples interpretable by machines. In this particular case the actual subject is just “caliper”, and not “caliper brakes”, since the goal of the triple is to identify the type of brakes of that bicycle. For another bicycle then this can be expressed as “has_brakes” and “cantilever”.

Finally, the triples expressed with URIs look considerably harder to read for us humans. That is why different grammars to encode (i.e. to record or write) triples exist, as Section 2.3 details.

2.2.3 From triples to a graph

A final aspect of RDF needs to be highlighted. All the four triples include “Slippery” itself, either as subject or object. All these triples relate to each trough the “Slippery” concept. The predicate in a triple can also be perceived as a connection (or link) between two nodes (subject and predicate). And with a set of inter-connected nodes we get a graph or a network. This is why a set of connected or related triples is also termed a Knowledge Graph.

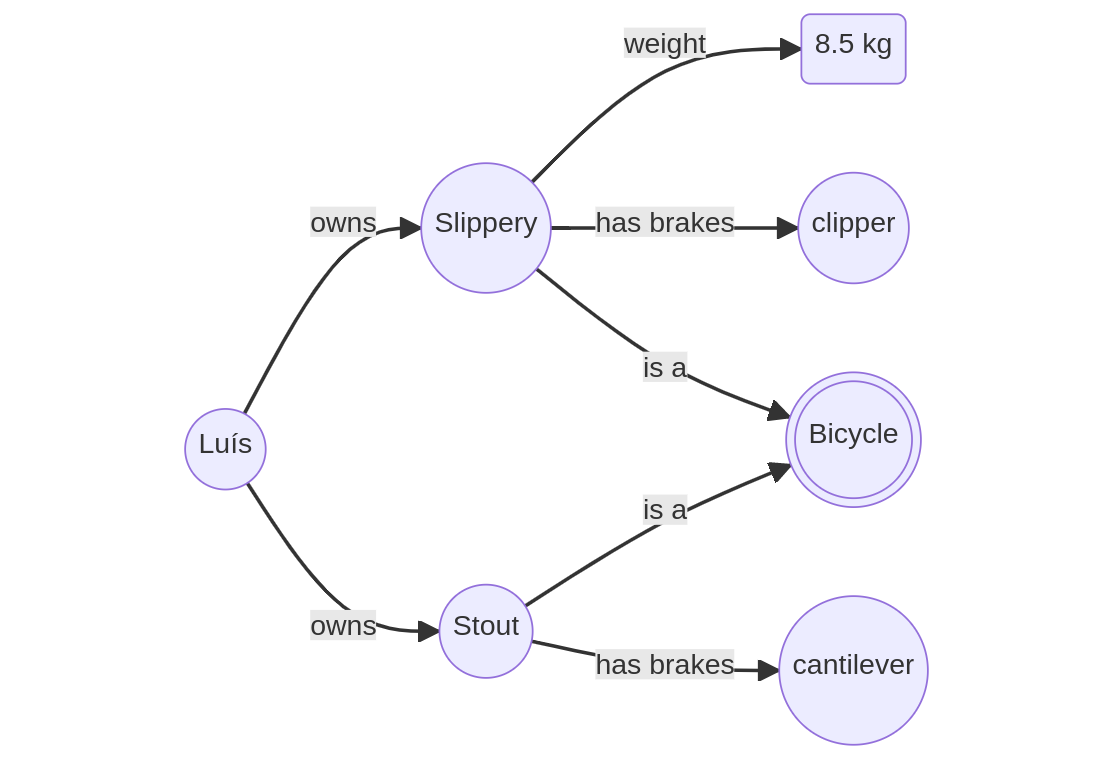

Figure 2 presents the “Slippery” triples in the form of a graph. There are a few extra triples referring to “Stout”, my city bike. This should make evident the idea of data in the Semantic Web building up networks or graphs, vis à vis the traditional flat tables. And even more interesting is the possibility to link these triples to any other triples out there in the web, hence the term Linked Data.

Note in the graph the different type of node used to express the “8.5 kg” literal. “Bicycle” is also expressed differently, with a double circle. That is to denote the difference between things, such as “Slippery” or “Stout” and categories of things. This is where semantics becomes relevant, as Chapter 3 shows in detail.

2.3 Turtle - Terse RDF Triple Language

RDF represents data with sets of interconnected triples that essentially state facts about a particular context. As Listing 5 exemplified, the linked nature of RDF provided by the employment of URIs makes the triples less than readable for humans. This in spite of the triple concept being rather similar to natural speech. A piece of the puzzle is missing: a syntax for the encoding (or expression) of triples. The end result must be something easily approachable by machines as well as humans.

In fact there are various options to this end, mostly specifications from the W3C. This book starts by introducing Turtle, short for the Terse RDF Triple Language (Beckett and Berners-Lee 2011). Of the different syntaxes available this is possibly the best for human consumption. It is also very similar to the syntax of the SPARQL query language (to be seen later in Chapter 5). Turtle is thus the best starting point for an introduction, but it is important to note that any RDF document described in this syntax can be automatically transformed into an alternative syntax.

2.3.1 Syntax basics

2.3.1.1 A triple

Defining a triple with Turtle is not that different from writing the

small sentences in natural language like in the previous section. A

Turtle triple is a sequence of three terms, the subject, the predicate

and the object, each separated by a white space and terminated by a full

stop (.). Some simple examples are given in Listing 6, in

the first line this is the subject, is_a the

predicate and triple the object. Triples always obey this

sequence in the Turtle syntax.

Listing 6: Simple triples expressed with the Turtle syntax.

this is_a triple .

another is_a triple .This simple syntax is unlikely to ever produce any notable literary work, but it is easily readable by humans and interpretable by machines. A collection of these simple triples can gather a great deal of information.

2.3.1.2 URIs

But this is the Semantic Web, triple elements cannot be this simple,

they must either identify a resource or represent literals. Enter URIs

then. Turtle treats them as special citizens, enclosed within the

lower-than and greater-than characters (< and

>), for example:

<http://example.org/path/>. Listing 7 lays down the

same triples presented in Listing 6 but with URIs pointing to a

fictitious document. URI fragments differentiate the various

resources.

Listing 7: Triples expressed with URIs in the Turtle syntax.

<http://other.example.org/path#this> <http://example.org/path#is_a> <http://example.org/path#triple> .

<http://other.example.org/path#another> <http://example.org/path#is_a> <http://example.org/path#triple> .URIs can also be relative references. Starting a URI directly with

the hash characters translates into a reference within the same document

or resource. For instance, the this and

another subjects could be referenced within the fictitious

document at http://other.example.org/path document defining

them as <#this> and

<#another>.

2.3.1.3 URI abbreviations

While the URI is a corner stone of the semantic web, by providing unique identifiers and the “linked” in “linked data”, they also make the encoding of RDF triples verbose and harder to read by humans. Moreover, URIs identifying resources within a same document are for the best past identical, they usually share the same URI schema and path, differing solely in the URI fragment. Full URIs not only clutter RDF documents, they also carry a good deal of redundancy.

Turtle deals with this problem in an elegant way, providing means for

the abbreviation of URIs. At the beginning of the document it is

possible to declare a particular string as an abbreviation for the lead

segment of an URI (usually the scheme and the path). This is made by

encoding a special triple with the keyword @prefix as the

subject, the abbreviation followed by the colon character

(:) as predicate and the abbreviated URI as object (Listing

8).

Listing 8: A URI abbreviation expressed in the Turtle syntax.

@prefix expl: <http://example.org/path#> .With the abbreviation defined, triple elements can be expressed in a

much leaner and readable way,

<http://example.org/path#this> becomes simply

expl:this. It is also possible to declare an empty

abbreviation, using solely the colon character as predicate. Empty

abbreviations are useful to shorten even further references to resources

within the same document. For instance with the abbreviation

@prefix : <http://example.org/path#> . the same

object can be expressed as :this.

The example in Listing 9 shows a full Turtle document that comes

closer to the way RDF is usually presented in this syntax. Abbreviations

are used as prefixes followed by the colon character and then the

resource name or identifier. A programme interpreting a Turtle document

automatically replaces the abbreviation followed by the colon with the

abbreviated URI. The URI segment figuring in the abbreviation

(e.g. http://example.org/path#) is also referred as

namespace.

Listing 9: A simple RDF document expressed in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

:this expl:is_a expl:triple .

:another expl:is_a expl:triple .Note that in Listing 9 the predicate expl:is_a and the

subject expl:triple are defined in a different document.

Again, these RDF examples are still missing proper semantics, the topic

for Chapter 3.

2.3.1.4 Literals

The previous few sections were focused on the expression and location of resources with URIs, However, at some point data needs to come down to concrete information bits. In the Semantic Web and beyond these are known as literals. For the best part they are numbers and alfa-numeric strings, but there no actual limits to their nature.

In the Turtle syntax literals are always represented between double

quotes, for instance "triple name". Numbers are represented

in the same way, they do not differ from strings. Long strings

containing line breaks must be flanked by three double quote characters

("""). Listing 10 provides some basic examples.

Listing 10: Some RDF literals expressed in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

:this expl:is_a expl:triple .

:this expl:length "4" .

:this expl:name "This triple example" .

:this expl:description

""" This is a long string describing the triple :this

and also examplifying the encoding of long strings. """ .2.3.1.5 Literal suffixes

Suffixes can be used to further specify the nature of literals. There are two kinds, suffixes declaring a language and suffixes declaring a literal type. They cannot be used together, as a language suffix only applies to strings.

Language suffixes are expressed with the at character

(@) followed by a language tag. Whereas the Turtle

specification itself does not make the nature of these tags explicit, it

is good practice to use the two character code list from the ISO 639-1

standard (“Codes for the representation of names of languages—Part

1: Alpha-2 code” 2002). Some examples are given in

Listing 11

Listing 11: Literal suffixes expressing the language of literals in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

:this expl:name "This triple example"@en .

:this expl:name "Ceci c'est un triple"@fr .To specify a type other than string two circumflex characters

(^^) are used, followed by an URI locating the desired

definition. This URI can point to a particular type defined within an

ad hoc RDF document, or to one of the basic types identified in

the XML schema specification (Biron and Malhotra 2004). In any of

the cases, URI abbreviations can be used to declutter the encoding

(Listing 12). In Section 3.3.5 the literal types specified by the XML

schema are reviewed in more detail.

Listing 12: Literal suffixes expressing literal types in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

@prefic xsd: <http://www.w3.org/2001/XMLSchema#> .

:this expl:length "4"^^xsd:integer .

:this expl:type "short"^^<http://example.org/path#tripleType> .2.3.1.6 Comments

Comments can be introduced anywhere in a Turtle document. They can be

used to identify the document author, its purpose, relation to other

documents, etc. They can annotate certain elements and provide cues on

their meaning. A comment is inserted with the hash character

(#), whatever is written after it in the same line is

ignored. E.g. # This is a comment.

2.3.2 Triple Abbreviations

One of the goals in the Turtle syntax is to declutter the encoding of triples. Earlier you saw how abbreviating URIs helps in achieving an easily readable RDF document. But abbreviations go further, with Turtle it is possible to abbreviate triples themselves.

2.3.2.1 Abbreviate subject and predicate

Data in digital form are often formed by sets of characteristics

describing a certain object. Just like each row in a CSV file or

database table provide diverse information bits related to a same

entity, object, person, etc. Such kind of data translates into RDF with

sets of triples with the same subject, or even with the same subject and

predicate, take for instance Listing 13. Turtle allows the abbreviation

of this kind of triples, instead of declaring only one object for the

subject–predicate pair, the comma character (,) can be use

to encode a list of different objects. Listing 14 gives an example that

encodes the exact same triples as Listing 13. It is common to declare

each subject in its own line, to ease reading further.

Listing 13: Example RDF triples with repeated object and predicate.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

:this expl:is_a expl:triple .

:this expl:is_a expl:example .

:this expl:is_a expl:simple .Listing 14: Example RDF triples with abbreviated object and predicate in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

:this expl:is_a expl:triple ,

expl:example ,

expl:simple .2.3.2.2 Abbreviate subject

A similar strategy is used to abbreviate triples that share the same

subject (but not the same predicate). In this case the semi-colon

character (;) is used to provide a list of predicate–object

pairs. Consider again Listing 12, that provided the example with

literals. Since both triples have the same subject, they can be

abbreviated as Listing 15 shows. Note again the practice of encoding

each predicate–object pair in its own line.

Listing 15: Example RDF triples with abbreviated object in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

@prefic xsd: <http://www.w3.org/2001/XMLSchema#> .

:this expl:length "4"^^xsd:integer ;

expl:type "short"^^expl:tripleType .2.3.3 Collections

The RDF standard specifies a special kind of object for lists of

things: the collection. It is a recursive construct, the first element

is declared with the rdf:first predicate and the remainder

as a sub-collection, using the rdf:rest predicate. With all

elements declared, the rdf:nil predicate is used to close

the collection. In the Turtle syntax, lists are enclosed with square

brackets ([ and ]) with individual elements

separated by a semi-colon (;). Listing 16 shows an

example.

Listing 16: RDF triples encoding a collection in the Turtle syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

:this expl:has_list [ rdf:first "Element A";

rdf:rest [ rdf:first "Element B";

rdf:rest [ rdf:first "Element C";

rdf:rest rdf:nil ] ] ] .That is a good deal of text to declare a list composed by three

simple literals. Turtle thus allows abbreviating collections further, by

directly enclosing elements in brackets (( and

)), separated solely by empty spaces. Listing 17 below

encodes exactly the same information as Listing 16 but is far more

readable. The tautology from this syntax is that ( ) is an

abbreviation for rdf:nil.

Listing 17: RDF triples enconding a collection in Turtle with abbreviated syntax.

@prefix : <http://other.example.org/path#> .

@prefix expl: <http://example.org/path#> .

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

:this expl:has_list ( "Element A" "Element B" "Element C" ) .2.3.4 Blank nodes

Look carefully again at Listing 16. Perhaps you noticed before, inside the square brackets there are no triples, but rather doubles. How can that be? They are in fact triples but their object is invisible, what in the Semantic Web is known as a Blank Node. The concept of blank node is general to RDF but is perhaps best exemplified with the Turtle syntax. In essence it is a shortcut to lean out and simplify documents. The blank node results from a collection of triples referring all to the same subject. For the sake of brevity, RDF allows the expression of such triples without explicitly declaring the subject. The programme that later reads the RDF is then responsible for creating a logical identifier for the subject.

With the Turtle syntax, a blank node is declared within a square

brackets block. Inside the block are pairs of predicates and subjects,

separated by a semi-colon (;). Listing 18 provides an

example. The usefulness of blank nodes will become more evident once you

learn how to specify an ontology (Chapter 3).

Listing 18: Blank nodes in the Turtle syntax.

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-path#> .

@prefix dc: <http://purl.org/dc/elements/1.1/> .

@prefix ex: <http://example.org/stuff/1.0/> .

<http://www.w3.org/TR/rdf-syntax-grammar>

dc:title "RDF/XML Syntax Specification (Revised)" ;

ex:editor [

ex:fullname "Dave Beckett";

ex:homePage <http://purl.org/net/dajobe/>

] .2.3.5 The “Slippery” example

Closing this Section, Listing 19 presents the “Slippery” triples again, but in the Turtle syntax. The same facts are stated again with URIs, but in a way that almost resembles the initial natural language statements. Machine and human readable.

Listing 19: The triples about the Slippery bicycle expressed in the Turtle syntax.

@prefix : <http://www.linked-sdi.com#> .

:Slippery :is_a :bicycle ;

:has_brakes :caliper ;

:weight_kg "8.5" .

:Luís :owns :Slippery . 2.4 Other RDF syntaxes

The Turtle syntax is one of many specified the past decades. This

section provides brief examples of alternative syntaxes that are also

relevant. They are not presented in detail like Turtle, it is instead

important to retain their existence. Do not shy away if you come across

RDF triples in what appears a foreign syntax. An online tool like the

one provided by isSemantic.net 3 can

easily translate to a familiar syntax. For the remainder of this

manuscript only Turtle will be used, as at present this is possibly the

leanest and easier to read by humans.

2.4.1 RDF/XML

RDF was initially specified on a XML syntax, first published by the

W3C in 2001 and updated several times up to version 1.1 released in 2014

(Gandon and Schreiber

2014). This syntax is today better known as RDF/XML. It is not

the most user friendly syntax and also rather verbose. RDF documents are

encoded as series of rdf:Description sections, each

reporting triples for a single subject. The latter is identified with

the rdf:about annotation in the opening section statement.

Each triple predicate translates into an independent statement within

the rdf:Description section

(e.g. <has_brakes in Listing 20). Objects of the type

resource are encoded with a rdf:resource annotation,

whereas literals get their own statement (e.g.

<weight_kg> in Listing 20). RDF/XML introduced the

concept of annotations (and namespaces) to RDF encoding, in that sense

helping to lean documents. However, with the formal sections and

statements and the full encoding of subject URIs it can still produce

rather cluttered documents for human eyes. Listing 20 encodes the

Slippery triples originally given in Listing 19. Compare the size of

both documents.

Listing 20: The triples about the Slippery bicycle expressed in the RDF/XML syntax.

<?xml version="1.0" encoding="UTF-8"?>

<rdf:RDF

xmlns="http://www.linked-sdi.com#"

xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"

>

<rdf:Description rdf:about="http://www.linked-sdi.com#Slippery">

<weight_kg>8.5</weight_kg>

<has_brakes rdf:resource="http://www.linked-sdi.com#caliper"/>

<is_a rdf:resource="http://www.linked-sdi.com#bicycle"/>

</rdf:Description>

<rdf:Description rdf:about="http://www.linked-sdi.com#Luís">

<owns rdf:resource="http://www.linked-sdi.com#Slippery"/>

</rdf:Description>

</rdf:RDF>2.4.2 N-Triples

N-Triples is a syntax developed about a decade ago, in parallel to

Turtle. Whereas the former intended to make RDF succinct and easy to

read by humans, N-Triples targeted ease of read by machines (Beckett, Carothers, and

Seaborne 2014). The concept is simple, each line corresponds to a

triple, with subject, predicate and object separated by a blank space

and URIs delimited by lower and greater characters (<

and >). A full stop (.) marks the end of a

triple. To a human the N-Triples looks very much like Turtle, but

without abbreviations. While N-Triples indeed facilitates triple

encoding/decoding by software it is also one of the most verbose RDF

syntaxes. Listing 21 presents again the Slippery triples in this

syntax.

Listing 21: The triples about the Slippery bicycle expressed in the N-Triples syntax.

<http://www.linked-sdi.com#Slippery> <http://www.linked-sdi.com#has_brakes> <http://www.linked-sdi.com#caliper> .

<http://www.linked-sdi.com#Slippery> <http://www.linked-sdi.com#weight_kg> "8.5" .

<http://www.linked-sdi.com#Slippery> <http://www.linked-sdi.com#is_a> <http://www.linked-sdi.com#bicycle> .

<http://www.linked-sdi.com#Lu\u00EDs> <http://www.linked-sdi.com#owns> <http://www.linked-sdi.com#Slippery> .2.4.3 JSON-LD

Soon after N-Triples and Turtle the W3C published yet another RDF

syntax, this time with web programming in mind. JSON-LD (Sporny et al. 2020) is a

RDF syntax leveraged on the JSON file format, thus directly translatable

to assets like objects and lists in programming languages such as

JavaScript or Python. A JSON-LD is usually outlined with two sections,

one with the context (@context object) encoding

abbreviations, and another for the actual triples (@graph

object). A JSON object is created for each subject, with respective

predicates and objects encoded as dictionaries (i.e. key-value pairs).

List may be used to link more than one subject with the same predicate.

Visually, JSON-LD is not a cluttered syntax (e.g. compared with

RDF/XML), but carries many bracket and curly bracket characters that can

make for a challenging read, especially in longer documents. Listing 22

provides an impression of this syntax for the Slippery triples with a

few exemplary abbreviations. JSON-LD makes extensive use of the JSON

specification, with plenty of alternatives for special cases. Among

other things, it is possible to define object-specific context sections,

that apply to a single subject. For the purpose of this manuscript this

early contact with JSON-LD is enough, however, if you ever intend to

work with RDF in a programming context a deeper understanding of this

syntax may come handy, especially in a web oriented environment.

Listing 22: The triples about the Slippery bicycle expressed in the JSON-LD syntax.

{

"@context": [

{"is_a": "http://www.linked-sdi.com#is_a"},

{"has_brakes": "http://www.linked-sdi.com#has_brakes"},

{"weight_kg": "http://www.linked-sdi.com#weight_kg"},

{"owns": "http://www.linked-sdi.com#owns"}

],

"@graph": [

{

"@id": "http://www.linked-sdi.com#Slippery",

"is_a": "http://www.linked-sdi.com#bicycle",

"has_brakes": "http://www.linked-sdi.com#caliper",

"weight_kg": "8.5"

},

{

"@id": "http://www.linked-sdi.com#Luís",

"owns": [{

"@id": "http://www.linked-sdi.com#Slippery"

}]

}]

}2.4.4 Notation3

Just a few years after starting the development of the Turtle syntax, the W3C housed its evolution into what became known as Notation3 (Tim Berners-Lee and Connolly 2011) (or N3 for short). This syntax expanded on Turtle aiming to facilitate further the expression of lists, logics or variables. Since it expands on Turtle, the triples in Listing 19 would not look any different in Notation3. However, Notation3 is well worth mentioning for it is actually an attempt to expand on RDF itself. It proposes new concepts such as functional predicates or literals that express whole graphs. Perhaps due to these ambitious goals, N3 never made it to an actual W3C recommendation, and has not been updated since 2011. However, it can be the root for new developments in the Semantic Web, that will be relevant to follow upon.

2.5 RDF Schema

From the onset the W3C meant to lend a semantic dimension to RDF. Accompanying the RDF specification the W3C also developed the RDF Schema Specification (RDF Schema or RDFS for short) (Brickley and R. V. Guha 1999). This specification had several goals: provide a best practice for the general structure of knowledge graphs, to standardise the linkage between knowledge and resources and be the basis for the semantic expression of RDF.

RDFS is a relatively compact set of general classes or categories of resources plus a set of predicates. All these resources are defined in two RDF documents maintained by W3C:

http://www.w3.org/1999/02/22-rdf-syntax-path#(abbreviated tordf:)http://www.w3.org/2000/01/rdf-schema#(abbreviated tordfs:)

The most relevant are briefly described next.

2.5.1 Categories

RDFS specifies a set of categories of resources, creating an elementary framework to differentiate objects (and subjects) in a knowledge graph. It considers the distinction between a resource proper and a literal, and some more. The most relevant are:

rdfs:Resource: the category of all things, as everything in RDF is a resource.rdfs:Class: specifies a particular category of resources. The meaning of the term Class is explained in detail in Section 3.2.rdfs:Literal: everything which is not a resource identifier, in most cases strings and numbers. Literals may have a type.rdfs:Datatype: the category of all literal types.

2.5.2 Predicates

A small, but powerful, set of predicates provides basic mechanisms to link to other resources and knowledge graphs in a standardised way. It also adds basic constraints to the formation of triples. Those deserving to be highlighted at this stage are:

rdfs:domain: used to specify the category of the object in a triple.rdfs:range: used to specify the category of the subject in a triple.rdf:type: declares a particular resource as being an element of a category. Can be abbreviated further toa.rdfs:label: provides a human readable name for a resource.rdfs:comment: annotates a resource with a human readable description.rdfs:seeAlso: links a resource to another resource that provides more information, or that is somehow related.rdfs:isDefinedBy: links a resource that defines the subject further.

2.5.3 The “Slippery” example

The simple example with the “Slippery” bicycle is again a good case

to show how RDFS can be used. Listing 23 expands on Listing 19 with the

addition of various triples that start making this knowledge graph truly

Linked Data. Take some time to study all the new triples.

Bicycle is now defined as a category of resources (or

things), with name, a brief description and a link to an external

resource, in this case a Wikipaedia page. Slippery is also

upgraded, with a formal name and description and a link back to its

owner. Note also the use of the a predicate to define

Slippery as a Bicycle.

Listing 23: The triples about the Slippery bicycle expanded with RDFS.

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-path#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix : <http://www.linked-sdi.com#> .

:Bicycle rdf:type rdfs:Class ;

rdfs:label "Bicycle" ;

rdfs:comment

"""A light-weight, pedal-powered vehicle

with two wheels attached to a frame,

one after the other.""" ;

rdfs:isDefinedBy <https://en.wikipedia.org/wiki/Bicycle> .

:Slippery a :Bicycle ;

rdfs:label "Slippery" ;

rdfs:comment

"A road sports bicycle with caliper brakes." ;

rdfs:seeAlso :Luís ;

:has_brakes :caliper ;

:weight_kg "8.5" .

:Luís :owns :Slippery . In a real knowledge graph it would be necessary to define the meaning

(i.e. semantics) of the predicates :has_brakes,

:owns, etc., and also the objects :Luís and

:caliper. If you never worked with data semantics before

things may start to seem too abstract at this stage. Fear not, Chapter 3

provides a thorough introduction to the Web Ontology Language, the W3C

standard that built on RDFS towards rich data semantics.

3 Web Ontology Language

The previous chapters were concerned with the syntax of Linked Data. The basic ways to represent data with RDF and the core standards making data “linked” on the internet. This chapter now dives into the semantics, how data is invested with meaning that is formalised and therefore unequivocal. That is the role of ontologies, abstractions of the real world synthesising concepts humans employ in natural discourse. The Web Ontology Language is the keystone of the Semantic Web, as it fulfils this capital role of formalising semantics, i.e. the meaning and intent of each datum.

This is the most abstract chapter in this book, and could be the most challenging for some readers. While you may never need to develop an ontology, at the end of the chapter you should be able to identify the set of ontologies relevant to a particular domain and how to apply them correctly. Understanding the basics of OWL and how to use ontologies is also crucial for geo-spatial data in the Semantic Web and the associated meta-data.

3.1 Historical Notes

Industries understood the power of computers to process data soon after World War II. Business oriented hardware and software proliferated throughout the 1950s, at first without coordination between vendors. In 1959, several companies in the United States assembled a consortium around CODASYL (Conference on Data Systems Languages) with the purpose of defining a programming language for data systems that could be executed on multiple hardware platforms. One of the results was the COmmon Business-Oriented Language (COBOL) programming language. If at first it had little clout with the software industry, once it was made mandatory by the United States government (Ensmenger 2012) it became a de facto standard.

Data stored by computers those days amounted to little more than collections of files, each storing a set of records. Soon enough industrial and governmental information systems grew in complexity past such simple structures. As the 1970s dawned, various attempts emerged towards more abstract and complex ways to represent data stored by computers. Eventually, (Chen 1976) proposed the Entity-Relationship (ER) meta-language, that finally broke in as a popular choice. Entity is something that exists, a being or a particular unit, relationship is a connection or association. ER is a graphical language, providing constructs to express categories of data, their attributes and the relationships between categories (Figure 3). While simple, ER completely abstracts the description of data from the underlying software or hardware.

Chen’s choice of words was not at hazard. In 1970, Codd had introduced the concept of “relational database”, defining rules for data management software that went beyond earlier file-based systems (Codd 1970). The first implementation of Codd’s vision was released in 1976 by IBM, the Multics Relational Data Store (Van Vleck 2023). In 1979, a small company named Relational Software released a relational database management system named Oracle, which grew enough to even take over the name of the company. ER and relational databases proved a perfect match, providing the theoretical and practical facets to data management. Together they swept the software industry and computer science curriculae.

In 1967, researchers at the Norwegian Computing Centre introduced a language for computer simulation – Simula – that included the concepts of objects, classes of objects and class inheritance (these are explored in more detail in the following section) (Dahl and Nygaard 1966). Simula was not a success with the industry, but proved immensely influential on subsequent programming languages. In 1980, Smalltalk was released, product of an effort at Xerox towards an educational programming language (Goldberg and Robson 1983). Smalltalk not only adopted the concept of objects from Simula, it made them its central paradigm (a sample programme is in Listing 24). By the middle of the 1980s the introduction of industry grade languages like C++ and Eiffel made object-oriented programming a staple of software development.

Listing 24: A sample programme in the Smalltalk language. Declares a class with a method to print a message, then instanciates the class and invoques its method.

Object subclass: #Hello

instanceVariableNames: ''

classVariableNames: ''

package: 'SmalltalkExamples'

Hello>>sayHello

Transcript show: 'Hello World!'

Hello new sayHelloAt the dawn of the 1990s a more fundamental understanding of software development came about. First Powers (Powers 1991) and then Gruber (Gruber 1995) proposed the direct application of Ontology to computer science. The term ontology became first popular within the artificial intelligence community and later in computer science to signify an abstract representation of real-world concepts pertaining to a particular domain or field.

The rapid growth of object-oriented programming fuelled the demand for novel abstract means to develop and document software. Rumbaugh et al. (Rumbaugh et al. 1991) and Booch (Booch et al. 2008) proposed the earliest infrastructures towards this end. Reunited under the Rational Software Corp. these and other researchers would develop such concepts into the Unified Modelling Language (UML). UML matched object-oriented programming just as ER had matched relational databases two decades earlier. But UML is a far more powerful and extensive language, allowing the abstraction of a wide range of constructs, such as class inheritance and composition, all with an expressive graphical meta-language. UML largely provided the infrastructure for applied philosophy envisioned by Powers and Gruber. UML was adopted as a standard by the Object Management Group (OMG) in 1997, at a time when it already featured at large in computer science curriculae.

At the turn of the 21st century, the UML standard was pushed into an even higher level of abstraction. In 2003, the IEEE Software journal published a series of articles advocating a novel software development method named Model-Driven Development (MDD) in which domain models are the primary products, and source code is a by-product Selic (2003). This idea was not entirely new, as various companies had since the 1980s proposed software to generate source code from graphical models (commonly known as CASE tools). What MDD brought anew was the extension of UML into meta-modelling, using abstractions such as categories of categories to capture the essential aspects of a knowledge domain. A broader discipline covering MDD, CASE tools and more became known as Model-Driven Engineering (MDE) (Da Silva 2015).

In 2005, UML version 2.0 was released, including an entire infrastructure (primitives and methods) dedicated to meta-modelling named Model-Driven Architecture (MDA) (Soley et al. 2000). With MDA, the core UML primitives can be specialised through a special primitive: the stereotype. A semantically related set of stereotypes can be gathered into a UML Profile, thus constituting a domain-specific lexicon, i.e. an ontology. MDA was almost immediately adopted by the industry and has since been used by various institutions to issue standards. Noteworthy are those issued by the Open Geospatial Consortium (OGC), many of which were also adopted by ISO. The INSPIRE domain model is also specified with the MDA infrastructure.

In parallel to the efforts of the OMG, the World Wide Web Consortium (W3C) also worked towards an ontology infrastructure. The W3C was primarily concerned with the exchange and automatic processing of data in the age of the internet. It started by specifying the RDF Schema, encompassing basic ontological notions such as category (class), property (domain, range, etc) and inheritance (sub-class).

When the first full RDF specification was released in 2004, the W3C had already started working on a more abstract infrastructure for meta-modelling. With a purposeful name, Web Ontology Language, and a catchy acronym, OWL, it presented a novel approach to ontology modelling (McGuinness, Van Harmelen, et al. 2004). OWL is not as abstract as UML, resulting from a process focused on the practical aspects of data exchange over the internet. The Semantic Web is yet to reach the ubiquity of UML and MDA, but as Chapter 1 outlined, modern requirements for data exchange might well change that picture.

3.1.1 Terminology

Before moving on to the theory it is important to pin down the terminology around Ontology employed in this manuscript. The definitions below large match the common interpretation of these terms in computer science. If something is not yet fully clear do not worry, the subsequent sections have the details.

Ontology: written with capital “O” refers to the branch of Metaphysics providing the general concepts used to abstract information in computer science.

ontology or information ontology: written with small “o” refers an abstract representation of a real world domain, using Ontology principles, and applicable in computer science. A ER or a UML model can be examples.

web ontology: an ontology (with small “o”) expressed with the Web Ontology Language.

3.2 Ontology

Sometime in the V century BC the Greek philosopher Parmenides wrote a poem. It was possibly titled “On Nature”, delving into broad questions on how humans perceive and interpret the reality around them. Much of the text was lost in the subsequent millennia, but Parmenides’ impact on the emergence of Ontology as a novel branch of Metaphysics prevails to this day. Eventually it would have a decisive impact on computer science, as Section 3.1 laid out.

Ontology has underwent twists and turns through history and retains a myriad of unresolved dissensions. It is therefore important to realise that the concepts absorbed in computer and information science are not universally accepted within the Ontology discipline itself. However, they enclose the metaphysical principles supporting the development and modelling of information ontologies.